In a January 2024 survey by Everest Group, 68% of CIOs cited budget concerns as a major hurdle to kickstarting or scaling their generative AI investments. As with cost estimation for legacy software, getting the budget right is crucial for generative AI projects. Misjudged estimates can lead to significant time loss and complications with resource management.

Before diving in, it’s essential to ask: Is it worth making generative AI investments now, despite the risks and the ever-changing landscape, or should we wait?

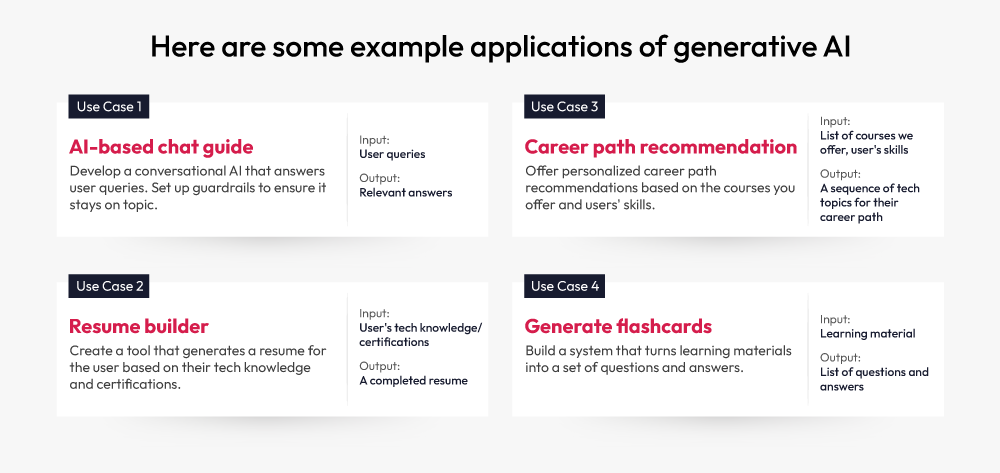

Simple answer: Decide based on risk and the ease of implementation. It’s evident that generative AI is going to disrupt numerous industries. This technology isn’t just about doing things faster; it’s about opening new doors in product development, customer engagement, and internal operations. When we speak with tech leaders, they tell us about the number of use cases pitched by their teams. However, identifying the most promising generative AI idea to pursue can be a maze in itself.

This blog presents a practical approach to estimating the cost of generative AI projects. We’ll walk you through picking the right use cases, LLM providers, pricing models and calculations. The goal is to guide you through the GenAI journey from dream to reality.

Choosing Large Language Models (LLMs)

When selecting an LLM, the main concern is budget. LLMs can be quite expensive, so choosing one that fits your budget is essential. One factor to consider is the number of parameters in the LLM. Why does this matter? The number of parameters gives a rough indication of both the cost and the speed of the model. Generally, more parameters mean higher costs and slower processing times. A model’s speed and performance are influenced by many factors beyond the parameter count, but for this article’s purpose, treat it as a basic estimate of what a model can do.

Types of LLMs

There are three main types of LLMs: encoder-only, encoder-decoder, and decoder-only.

- Encoder-only model: This model uses only an encoder, which takes in and classifies input text. Such models are typically trained to predict missing or “masked” words within the text and to perform next sentence prediction.

- Encoder-decoder model: These models first encode the input text (like encoder-only models) and then generate or decode a response based on the now encoded inputs. They can be used for text generation and comprehension tasks, making them useful for translation.

- Decoder-only model: These models are used solely to generate the next word or token based on a given prompt. They are simpler to train and are best suited for text-generation tasks. Models like GPT, Mistral, and LLaMa fall into this category. Typically, if your project involves generating text, decoder-only models are your best bet.

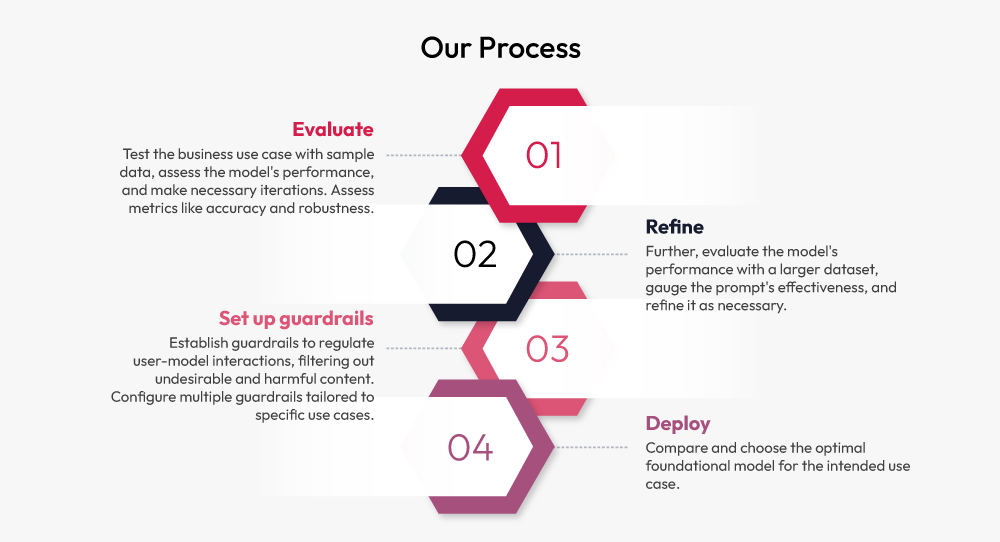

Our implementation approach

At Robosoft, we’ve developed an approach to solving client problems. We carefully choose models tailored to the use case, considering users, their needs, and how to shape interactions. Then, we create a benchmark, including cost estimates. We compare four or five models, analyze the results, and select the top one or two that stand out. Afterward, we fine-tune the chosen model to match clients’ preferences. It’s a complex process, not simple math, but we use data to understand and solve the problem.

Where to start?

Start with smaller, low-risk projects that help your team learn or boost productivity. Generative AI relies heavily on good data quality and diversity. So, strengthen your data infrastructure by kicking off smaller projects now, ensuring readiness for bigger AI tasks later.

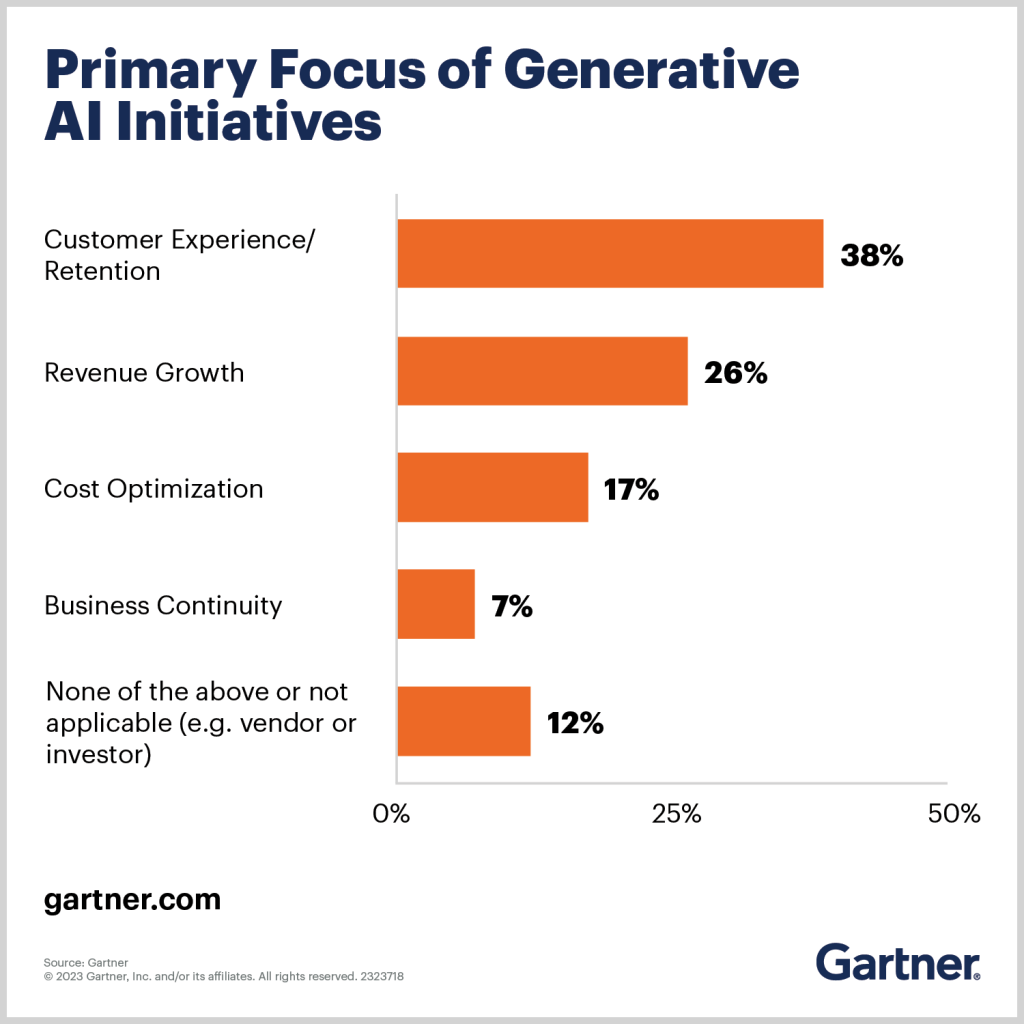

In a recent Gartner survey of over 2,500 executives, 38% reported that their primary goal for investing in generative AI is to enhance customer experience and retention. Following this, 26% aimed for revenue growth, 17% focused on cost optimization, and 7% prioritized business continuity.

Begin with these kinds of smaller projects. It will help you get your feet wet with generative AI while keeping risks low and setting you up for bigger things in the future.


Different methods of implementing GenAI

There are several methods for implementing GenAI, including RAG, Zero Shot, One Shot, and Fine Tuning. These are effective strategies that can be applied independently or combined to enhance LLM performance based on task specifics, data availability, and resources. Consider them as essential tools in your toolkit. Depending on the specific problem you’re tackling, you can select the most fitting method for the task at hand.

- Zero shot and One shot: These are prompt engineering approaches. In the zero-shot approach, the model makes predictions without any examples of, or training on, the specific task. It suits simple, general tasks that rely on pre-trained knowledge. In the one-shot approach, the prompt includes a single worked example for the model to follow before it makes predictions. It is ideal for tasks where one example can significantly improve performance.

- Fine tuning: This approach further trains the model on a specific dataset to adapt it to a particular task. It is necessary for complex tasks requiring domain-specific knowledge or high accuracy. Fine tuning incurs higher costs due to the need for additional computational power and training tokens.

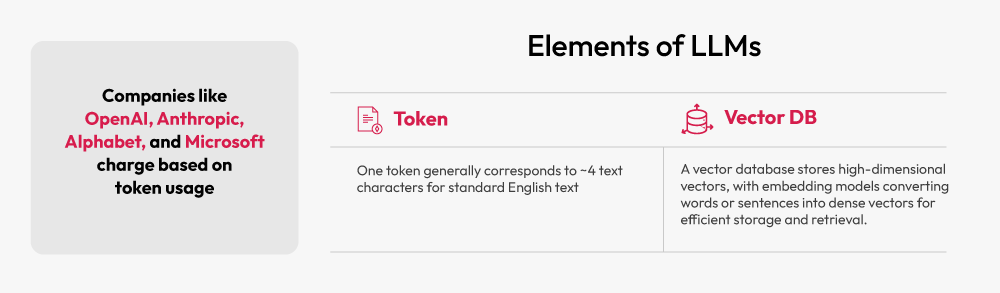

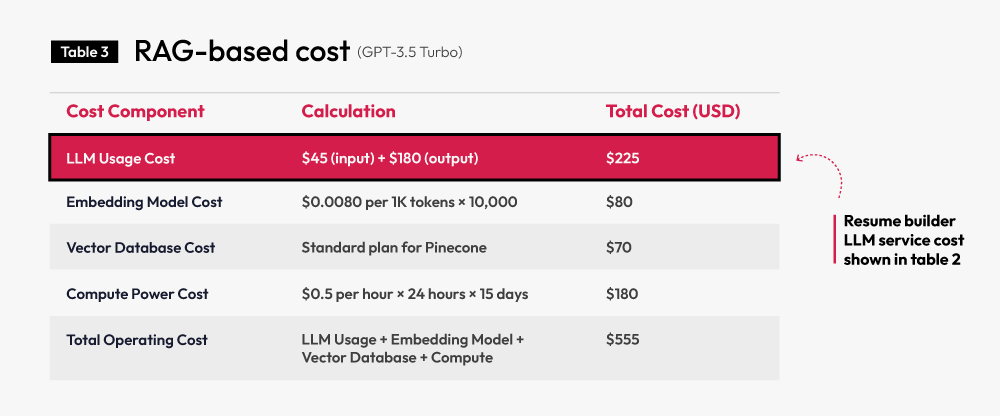

- RAG (Retrieval-Augmented Generation): RAG links LLMs with external knowledge sources, combining the retrieval of relevant documents or data with the model’s generation capabilities. This approach is ideal for tasks requiring up-to-date information or integration with large datasets. RAG implementation typically incurs higher costs due to the combined expenses of LLM usage, embedding models, vector databases, and compute power.
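To make the difference between the zero-shot and one-shot approaches concrete, here is a minimal sketch of how the two prompts might be assembled. The task wording, the function names, and the example pair are all hypothetical and only for illustration; they are not taken from any provider's SDK.

```python
# Hypothetical instruction for a resume-related task (illustration only).
TASK = "Rewrite the following job description as a single resume bullet point."


def zero_shot_prompt(user_text: str) -> str:
    """Build a prompt that contains only the instruction and the user's input."""
    return f"{TASK}\n\nInput: {user_text}\nOutput:"


def one_shot_prompt(user_text: str, example_input: str, example_output: str) -> str:
    """Build a prompt that shows one worked example before the user's input."""
    return (
        f"{TASK}\n\n"
        f"Input: {example_input}\nOutput: {example_output}\n\n"
        f"Input: {user_text}\nOutput:"
    )


user_text = "I was responsible for the weekly sales report."
zero = zero_shot_prompt(user_text)
one = one_shot_prompt(
    user_text,
    example_input="I answered customer emails.",  # hypothetical example pair
    example_output="Resolved customer inquiries by email.",
)

# The one-shot prompt is longer, so it consumes more input tokens per call.
print(len(zero), len(one))
```

The design point for budgeting is that every example added to a prompt is sent with every request, so one-shot prompting raises the input token count of each interaction.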

Key factors affecting generative AI investments (Annexure-1)

- Human Resources: Costs associated with salaries for AI researchers, data scientists, engineers, and project managers.

- Technology and Infrastructure: Expenses for hardware (GPUs, servers), software licensing, and cloud services.

- Data: Costs for acquiring data, as well as storing and processing large datasets.

- Development and Testing: Prototyping and testing expenses, including model development and validation.

- Deployment: Integration costs for implementing AI solutions with existing systems and ongoing maintenance.

- Indirect costs: Legal and compliance costs, as well as marketing and sales.

LLM pricing

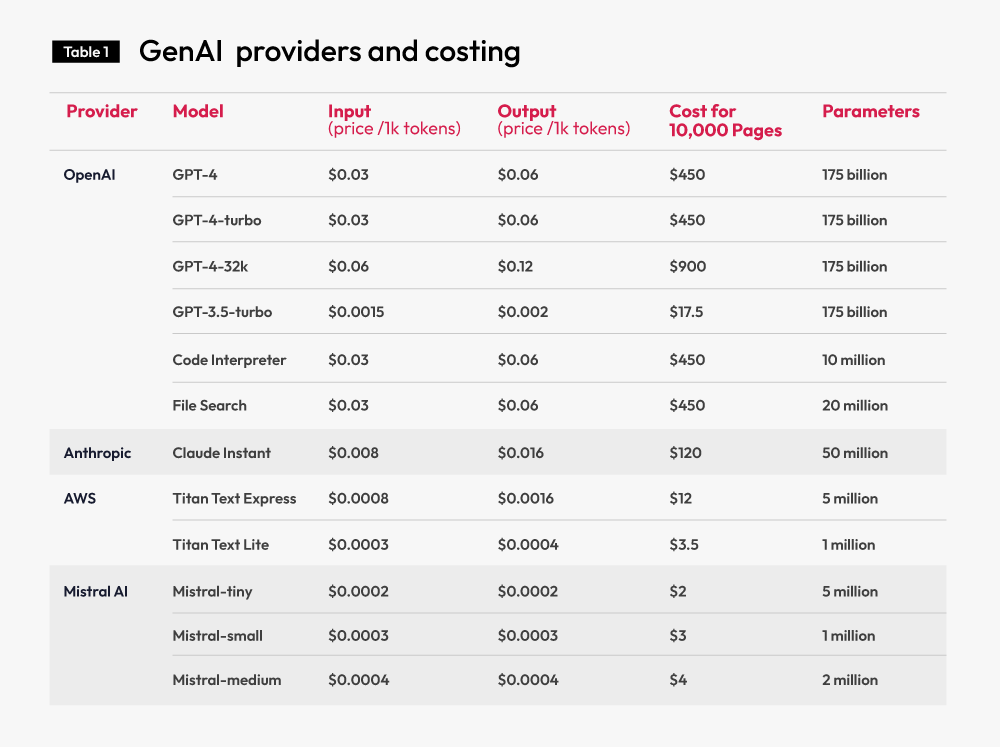

Once you choose the implementation method, you must decide on an LLM service (refer to table 1) and then work on prompt engineering, which is part of software engineering.

Commercial GenAI products work on a pay-as-you-go basis, but it’s tricky to predict their usage. When building new products and platforms, especially in the early stages of new technologies, it’s risky to rely on just one provider.

For example, if your app serves thousands of users every day, your cloud computing bill can skyrocket. Instead, we can achieve similar or better results using a mix of smaller, more efficient models at lower cost. We can train and fine-tune these models to perform specific tasks, which can be more cost-effective for niche applications.

In table 1 above, “model accuracy” estimates are not included because they differ by scenario and cannot be quantified in general. Also note that costs may vary; the figures shown are those listed on each provider’s website as of July 2024.

Generative AI pricing based on the implementation scenario

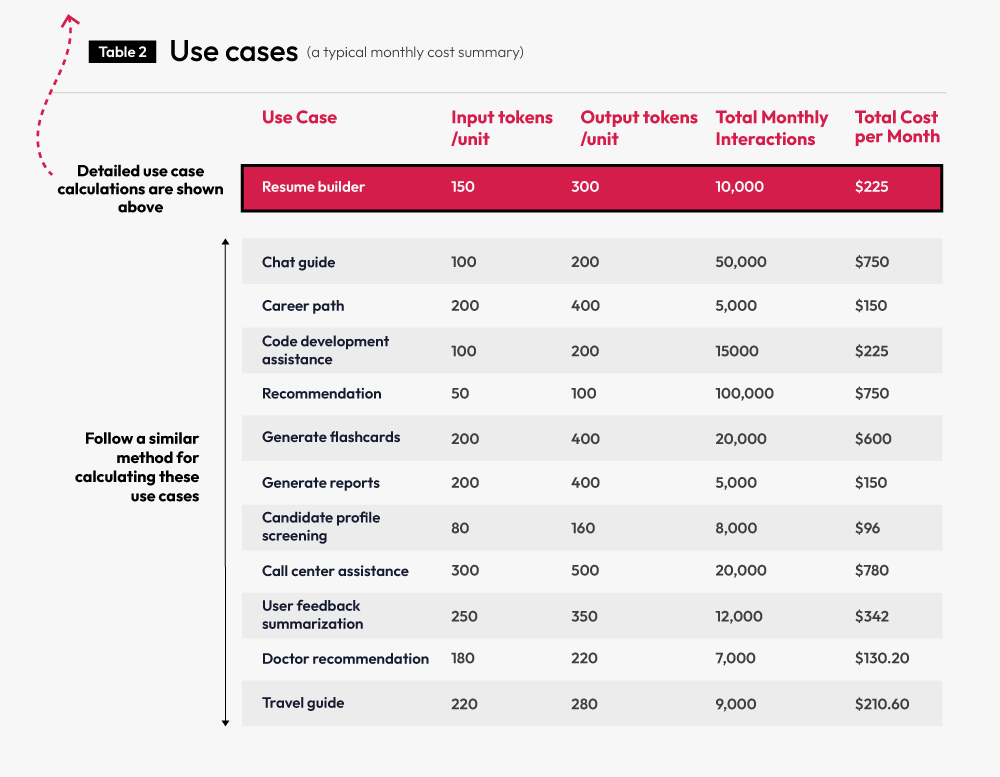

Let’s consider typical pricing for the GPT-4 model for the use case below.

Here are some assumptions:

- We’re only dealing with English.

- Each token is counted as 4 characters.

- Input: $0.03 per 1,000 tokens

- Output: $0.06 per 1,000 tokens
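The token assumption above can be turned into a quick estimator. This is only a rough sketch: `estimate_tokens` and `CHARS_PER_TOKEN` are illustrative names of our own, and a real tokenizer will give different counts, so use the provider's tokenizer when you need exact figures.

```python
import math

# Assumption from the list above: one token is counted as 4 characters of English text.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate the token count from the character length, rounding up."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


# A 600-character input works out to 150 tokens under this assumption.
sample = "x" * 600
print(estimate_tokens(sample))
```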

Use case calculations – Resume builder

When a candidate generates a resume using AI, the system collects basic information about work and qualifications, which equates to roughly 150 input tokens (about 30 lines of text). The output, including candidate details and work history, is typically around 300 tokens. This forms the basis for the input and output token calculations in the example below.

Let’s break down the cost.

Total Input Tokens:

- 150 tokens per interaction

- 10,000 interactions per month

- Total Input Tokens = 150 tokens * 10,000 interactions = 1,500,000 tokens

Total Output Tokens:

- 300 tokens per interaction

- 10,000 interactions per month

- Total Output Tokens = 300 tokens * 10,000 interactions = 3,000,000 tokens

Input Cost:

- Cost per 1,000 input tokens = $0.03

- Total Input Cost = 1,500,000 tokens / 1,000 * $0.03 = $45

Output Cost:

- Cost per 1,000 output tokens = $0.06

- Total Output Cost = 3,000,000 tokens / 1,000 * $0.06 = $180

Total Monthly Cost:

Total Cost = Input Cost + Output Cost = $45 + $180 = $225
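The same arithmetic can be written as a small Python function, which makes it easy to rerun with your own volumes. This is a minimal sketch using the prices assumed in this section; the function and constant names are ours for illustration, and current provider pricing may differ.

```python
# Prices assumed in this section (USD per 1,000 tokens).
INPUT_PRICE_PER_1K = 0.03
OUTPUT_PRICE_PER_1K = 0.06


def monthly_llm_cost(
    input_tokens_per_interaction: int,
    output_tokens_per_interaction: int,
    interactions_per_month: int,
    input_price_per_1k: float = INPUT_PRICE_PER_1K,
    output_price_per_1k: float = OUTPUT_PRICE_PER_1K,
) -> tuple[float, float, float]:
    """Return (input cost, output cost, total cost) in USD for one month."""
    total_input_tokens = input_tokens_per_interaction * interactions_per_month
    total_output_tokens = output_tokens_per_interaction * interactions_per_month
    input_cost = round(total_input_tokens / 1000 * input_price_per_1k, 2)
    output_cost = round(total_output_tokens / 1000 * output_price_per_1k, 2)
    return input_cost, output_cost, round(input_cost + output_cost, 2)


# Resume builder figures from the text: 150 input tokens, 300 output tokens,
# 10,000 interactions per month.
input_cost, output_cost, total = monthly_llm_cost(150, 300, 10_000)
print(f"Input: ${input_cost:,.2f}  Output: ${output_cost:,.2f}  Total: ${total:,.2f}")
```

Because output tokens are priced at twice the rate of input tokens here, the length of the generated resume drives most of the bill in this scenario.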

RAG implementation cost

Retrieval-Augmented Generation (RAG) is an AI framework that integrates information retrieval with a foundational LLM to generate text. In the resume builder use case, RAG retrieves relevant data based on the latest information, without the need for retraining or fine-tuning. By leveraging RAG, we can keep the generated resumes accurate and up to date, which improves the quality of responses.

Fine tuning cost

Fine tuning involves adjusting a pre-trained AI model to better fit specific tasks or datasets, which requires additional computational power and training tokens, increasing overall costs. For example, if we fine-tune the Resume Builder model to better understand industry-specific terminology or unique resume formats, this process will demand more resources and time than using the base model. We are therefore not including a fine-tuning cost in the estimate for this use case.

Summary of estimating generative AI cost

To calculate the actual cost, follow these steps:

- Define use case: E.g. Resume builder

- Check cost of LLM service: Refer to table 1.

- Check RAG implementation cost: Refer to table 3.

- Combine costs: LLM service, RAG cost, and calculate additional costs (Annexure-1) such as hardware, software licensing, development and other services.
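The steps above amount to adding up cost components over a common period. The sketch below shows one way to structure that sum; the class and field names are hypothetical, the categories mirror Annexure-1, and every component other than the LLM service is left at zero for you to fill in from the tables and your own quotes. Treating the period as 12 months is an assumption made for the example.

```python
from dataclasses import dataclass, fields


@dataclass
class ProjectCosts:
    """Cost components in USD, all covering the same period (hypothetical structure)."""

    llm_service: float = 0.0
    rag: float = 0.0
    human_resources: float = 0.0
    technology_and_infrastructure: float = 0.0
    data: float = 0.0
    development_and_testing: float = 0.0
    deployment: float = 0.0
    indirect: float = 0.0

    def total(self) -> float:
        """Sum every cost component."""
        return sum(getattr(self, f.name) for f in fields(self))


# Only the LLM service figure comes from this article: $225 per month for the
# resume builder, extended over an assumed 12-month period.
estimate = ProjectCosts(llm_service=225 * 12)
print(f"LLM service only: ${estimate.total():,.2f}")
```

Keeping each category as a separate field makes it clear which parts of an estimate are still missing, which matters because LLM usage is typically a small share of the total project cost.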

The rough estimate would be somewhere between $150,000 and $250,000. These are ballpark figures. Costs may vary depending on your needs, LLM service, location, and market conditions. It’s advisable to talk to our GenAI experts for a precise estimate. Also, keep an eye on the prices of hardware and cloud services because they change frequently.

You can check out some of our successful enterprise projects here.

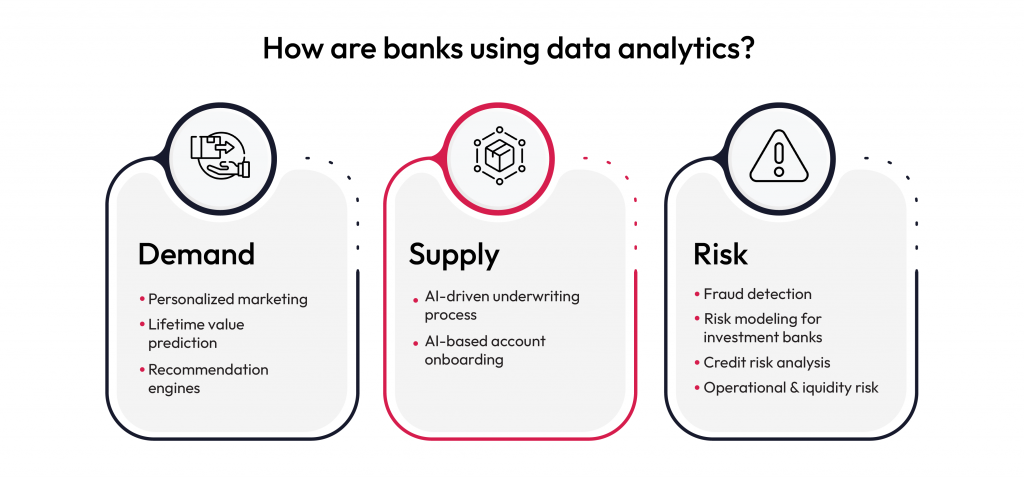

GenAI reducing data analytics cost

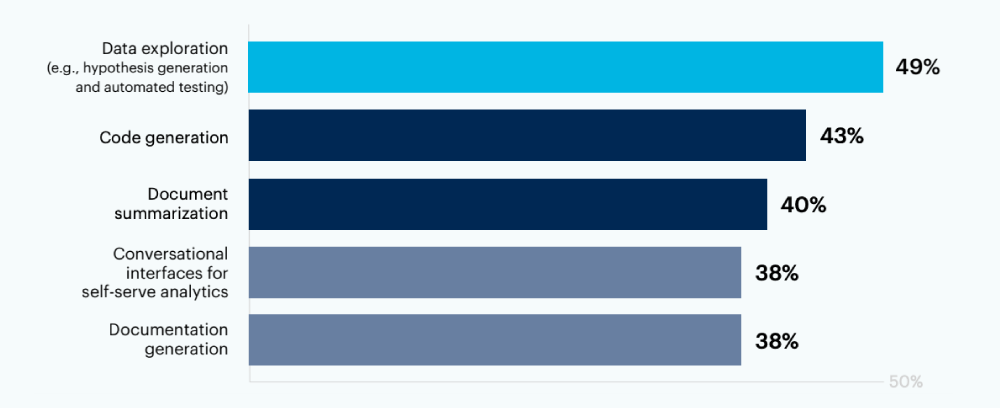

At Robosoft, we believe in data democratization—making information and data insights available to everyone in an organization, regardless of their technical skills. A recent survey shows that 32% of organizations already use generative AI for analytics. We’ve developed self-service business intelligence (BI) solutions and AI-based augmented analytics tools for big players in retail, healthcare, BFSI, Edtech, and media and entertainment. With generative AI, you can also lower data analytics costs by avoiding the need to train AI models from the ground up.

Image source: Gartner (How your Data & Analytics function using GenAI)

Conclusion

Generative AI investments aren’t just about quick financial gains; they require a solid data foundation. Deploying generative AI with poor or biased data can lead to more than just inaccurate results. For instance, if a company’s hiring process uses data that is biased by gender or race, it could discriminate against certain people. In a resume-builder scenario, biased data might incorrectly label a user, damaging the company’s reputation, causing compliance issues, and raising concerns among investors.

A lot is changing even as we write this article, and our understanding of generative AI and what it can do may evolve. However, our intent of providing value to customers and driving change remains.