When Apple launched Siri in 2011, no one predicted the success of voice technology. Eight years later, almost 100 million mobile phones have a voice assistant. While industry leaders such as Google and Amazon hold a major market share, voice technology is useful across many industries. From banking to the corporate sector, every industry is seeing a growing number of voice integrations to meet customer demands.

Let’s explore why voice is popular, its applications, the technical aspects, and best practices.

Why Voice is Popular

Increased awareness, ever-changing user demand, and improved services through voice integration have triggered a digital shift. The growing need for speed, convenience, efficiency, and accuracy has added to the demand for voice optimization in mobile and other devices. Voice assistants are being integrated into IoT devices such as appliances, thermostats, and speakers, giving each of them a way to connect with the user.

Here are some reasons why voice technology is popular across devices.

- On average, we can speak 130 words per minute but type only 40 words per minute. These numbers vary from user to user, but the idea behind using voice is clear: speaking is roughly three times faster than typing, so users save the time they would otherwise spend typing.

- In 2012, the speech recognition error rate was 33%; by 2017, it had dropped to 5%. As the technology has improved, voice search has become more accurate, so when a user speaks an instruction, the device is highly likely to understand it without errors.

- Voice technology is easy to access through hardware. Thanks to high adoption and easy availability, many users own smart devices such as smartphones, smartwatches, and smart speakers. Voice search and voice integration are therefore within easy reach, which helps explain the demand.

Voice Assistant: Use Cases

Voice technology is not just valuable in the entertainment industry. Healthcare can benefit from voice integration as it will help patients manage their health data, improve claims management, and seamlessly deliver first-aid instructions.

Let’s explore further to find out where voice technology can be utilized.

Enterprise Support

Consider the following two scenarios, in which you will also find two separate use cases of voice for the enterprise.

- A sales representative is out visiting clients, noting down activities and key points while moving from one client to the next. Everything is written down by hand, but in the back of the representative’s mind is the thought of entering all of this data into the office software later. This is tiring to even think of, right?

- Everybody uses office supplies, but no one makes an effort to replace anything. This goes on until the inventory runs dry and there are no supplies left to use. Assigning the task to one person is a waste of manpower because that person’s time can be better utilized.

In the first case, the sales representative can use a smartphone to populate the software fields directly. As the representative speaks, everything is entered into the database. It can be edited later, but the major part of the job is done immediately.

In the second case, an Echo Dot can serve as a shared device for ordering supplies. You still have to keep track of inventory levels and the feasibility of each purchase, but wouldn’t it be nice to ask Echo to order a box of pens?

Healthcare Support

Voice assistants can lead to seamless workflows in the healthcare industry. Here’s how:

- There are multiple healthcare devices to help you monitor your health, for example a diabetes monitor that can be integrated with Alexa. The solution can help you follow your medication, diet, and exercise plan properly, all through voice.

- Alongside health management, non-adherence to medication is another reason for declining health. Voice reminders can be integrated into Alexa to remind elders to take their medication on time.

- Alexa Skills are also being used to deliver first-aid instructions through voice. In an emergency, you don’t have to read the instructions; you only have to listen and act.

Banking Support

The application of voice technology in banking is obvious. You can check your account balance, make payments, pay bills, and raise a complaint. These are basic functions, but they help users get tasks done in less time and without hassle.

In a more advanced form, voice technology in banking is being explored in combination with NLP (Natural Language Processing) and machine learning. In the future, users can expect advanced support from the voice assistant: you could ask whether you are investing in the right fund, and the assistant would present statistics to help you understand the situation.

Automobile Support

Another known application of Alexa Skills and Google Voice is in the automobile industry, where voice integration can be used to offer roadside assistance. Voice commands help you carry out emergency automobile tasks without reading instructions online or typing a query into a search engine. You only have to call out to your phone and ask for help.

Application Chatbots

Amazon Lex is a service offered by Amazon for creating voice and text conversational interfaces in an application. It uses deep learning for automatic speech recognition, which converts speech to text. Amazon Lex also has natural language processing abilities, which make for engaging customer interfaces and high user satisfaction. Together, these two features make it easier for developers to build conversational bots into an application.

Google Voice and Alexa Skills

Alexa Skills and Google Voice are extensions that help build voice-enabled applications. These are the tools that let us build experiences for virtual assistants and smart speakers.

Alexa Skills

- In Alexa, Headspace is a known skill. Headspace Campfire, Headspace Meditation, and Headspace Rain are all different skills.

- To invoke these skills, the user has to speak an invocation phrase. It can be anything; we use Headspace as the invocation phrase in this example. The phrase matters because discovery of your skill depends on it.

- The invocation phrase is combined with different launch utterances such as ask headspace, tell headspace, or open headspace. Sometimes, the skill can trigger even when you say, “Alexa, I need to relax.”

- The intent of a voice command is the task the user is trying to accomplish. In “start meditation,” start is the intent.

- If a user says start <game>, start is the intent and <game> is the slot.

- It is also possible to run a command with no intent, such as “Alexa, launch headspace.” This is similar to opening the home page in a browser.

- Fulfilment is the API logic that executes the action or intent, such as start <game>.

- Lastly, Alexa has built-in intents that are not handled by an external function. If you say, “Alexa, stop,” that is an internal command.
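The terms above can be put together in a small sketch. The dictionary below follows the general shape of an Alexa interaction model (invocation name, intents, slots, sample utterances). The intent name `StartIntent`, the slot name `activity`, the slot type `ACTIVITY_TYPE`, and the sample phrases are hypothetical and chosen only for illustration; they are not taken from the real Headspace skill.

```python
import json

# Hypothetical interaction model, for illustration only.
interaction_model = {
    "interactionModel": {
        "languageModel": {
            # The phrase the user speaks to reach the skill.
            "invocationName": "headspace",
            "intents": [
                {
                    # Custom intent: needs fulfilment code on the backend.
                    "name": "StartIntent",
                    "slots": [{"name": "activity", "type": "ACTIVITY_TYPE"}],
                    "samples": ["start {activity}", "begin {activity}"],
                },
                # Built-in intent: "Alexa, stop" needs no sample utterances.
                {"name": "AMAZON.StopIntent", "samples": []},
            ],
            "types": [
                {
                    "name": "ACTIVITY_TYPE",
                    "values": [
                        {"name": {"value": "meditation"}},
                        {"name": {"value": "rain"}},
                    ],
                }
            ],
        }
    }
}


def custom_intents(model):
    """Return the names of intents that are not built-in AMAZON.* intents."""
    intents = model["interactionModel"]["languageModel"]["intents"]
    return [i["name"] for i in intents if not i["name"].startswith("AMAZON.")]


def slot_names(model, intent_name):
    """Return the slot names declared for one intent."""
    intents = model["interactionModel"]["languageModel"]["intents"]
    for intent in intents:
        if intent["name"] == intent_name:
            return [slot["name"] for slot in intent.get("slots", [])]
    return []


if __name__ == "__main__":
    print(json.dumps(interaction_model, indent=2))
    print(custom_intents(interaction_model))
    print(slot_names(interaction_model, "StartIntent"))
```

In this sketch, `start {activity}` plays the role of start <game>: the intent carries the task, and the slot carries the variable part of the utterance.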

Google Voice

Google Voice is similar to Alexa Skills with some minor differences:

- In Google Voice, Headspace offers actions (similar to intents), the conversation logic lives in Dialogflow, and agents such as Headspace Meditation or Headspace Rain sit at the backend.

- An agent in Google accomplishes actions, for example “Ok Google, start headspace meditation.”

- You can also use “Ok Google, launch headspace” to start an action with no intent.

- Google has built-in intents such as “Ok Google, help me relax,” which give Google several options, one of which is Headspace.

VUX Design Research

When you are building actions or skills for Google or Alexa, your design research should cover the following:

- Understand how different types of users are interacting with the platform or a specific category.

- Understand the user’s intent and why they are using the voice assistant.

- Understand how actions and skills are triggered through different phrases or utterances.

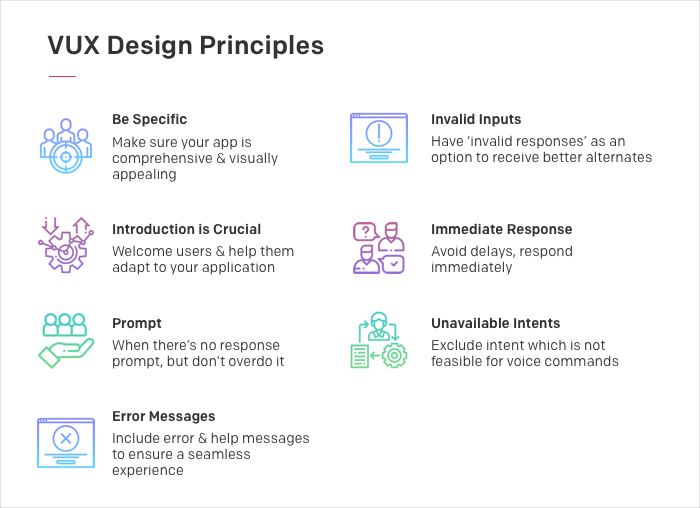

- Be Specific – Every user can consume more graphical information compared to voice. No one can sit through the voice commands for a long time. So, ensure that your app is visually appealing.

- Introduction Is Key – When the user starts the action with no intent, as in “Alexa, launch headspace,” introduce yourself. Welcome them and help them get used to your application.

- Prompt Again – When the user stops responding, prompt again. But, do this without overdoing it, just a short re-prompt is enough.

- Include Error Messages – You need to include error and help messages to pass certification. For example, a help message can offer a tip such as keeping the mic close when responding.

- Handle Invalid Inputs – When the voice command is unclear, have responses for invalid input ready so the interaction can continue.

- Immediate Response – Avoid delays. You can’t give a 10-second speech before playing the song the user wants. If the user says, “Play XYZ,” simply say “Playing XYZ” and play it. When the user says pause, pause.

- Don’t Include Unavailable Intents – Do not include any intent in the app that is not feasible for voice commands. This can lead to certification rejection.
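Several of these practices (welcome message, short re-prompt, help and error messages, immediate response) can be sketched in one small dispatcher. The response dictionaries below follow the general shape of the Alexa JSON response (`outputSpeech`, `reprompt`, `shouldEndSession`); the skill name "Relax Time", the intent names `PlayIntent` and `HelpIntent`, and all wording are hypothetical. A real skill would also send an audio directive to actually play something, which this sketch leaves out.

```python
def build_response(speech, reprompt=None, end_session=False):
    """Build a response dict in the general shape of an Alexa JSON response."""
    response = {
        "outputSpeech": {"type": "PlainText", "text": speech},
        "shouldEndSession": end_session,
    }
    if reprompt:
        # Spoken once if the user stays silent; kept short on purpose.
        response["reprompt"] = {
            "outputSpeech": {"type": "PlainText", "text": reprompt}
        }
    return {"version": "1.0", "response": response}


def respond(intent_name, slots=None):
    """Pick a reply for a (hypothetical) intent name; None means a bare launch."""
    slots = slots or {}
    if intent_name is None:
        # Launch with no intent: introduce the skill and offer a next step.
        return build_response(
            "Welcome to Relax Time. You can say, start meditation.",
            reprompt="What would you like to start?",
        )
    if intent_name == "PlayIntent" and slots.get("title"):
        # Immediate response: confirm briefly, no long speech.
        return build_response(f"Playing {slots['title']}.", end_session=True)
    if intent_name == "HelpIntent":
        return build_response(
            "You can say, play, followed by a title. "
            "Tip: keep the mic close when you answer.",
            reprompt="What should I play?",
        )
    # Unclear or unsupported input: explain briefly and re-prompt.
    return build_response(
        "Sorry, I didn't catch that. You can say, play, followed by a title.",
        reprompt="What should I play?",
    )


if __name__ == "__main__":
    print(respond(None))
    print(respond("PlayIntent", {"title": "XYZ"}))
    print(respond("SomethingElse"))
```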

How to Set Up Voice Skills?

Alexa Skill

1. Start by signing up or logging in to your dashboard, then click ‘Get Started’ under Alexa Skills Kit. After that, click ‘Add new skill’.

2. As we are creating a custom skill, click on ‘Custom interaction model’ and add a name and an invocation name. The name identifies the skill on your dashboard, and the invocation name is what activates the skill on Amazon Echo. Once you have entered the skill information, move to the interaction model and click ‘Launch Skill Builder’. Here, add the intent name and utterances. The intent is the user’s intention behind a request to the skill.

For example, if the intent is ‘Hello World’, utterances can be, ‘Hi’, ‘Howdy’, etc.

Save this model and go to ‘Configuration’ to switch to AWS Lambda.

3. Now, go to the Lambda page and select ‘Create function’. There are several templates for ‘Alexa-SDK’; in our example, we will use ‘Alexa-skill-kit-SDK-factskill’.

Enter the name of the function and select ‘Choose an existing role’ from the drop-down. The existing role is ‘lambda_basic_execution’.

Under ‘Add triggers’, select ‘Alexa skill kit’ to add it as a trigger for the Lambda function you created.

4. Before moving forward, create a test function and copy the ARN endpoint for the next step.

5. Lastly, complete the process by going to ‘Configuration’ and completing the Endpoint section by selecting ‘AWS Lambda ARN’ and pasting the copied ARN here. Click on the ‘Enable’ switch and you are done.
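To give a feel for what the Lambda function behind the skill does, here is a minimal sketch of a handler for the ‘Hello World’ example. It is not the code from the template used in the steps above: it assumes a Python runtime and handles the raw request JSON directly, without an SDK. The intent name `HelloWorldIntent` and all spoken text are hypothetical.

```python
def speak(text, end_session=True):
    """Wrap plain text in the general shape of an Alexa JSON response."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


def lambda_handler(event, context):
    """Entry point that AWS Lambda calls with the skill request as `event`."""
    request = event["request"]

    if request["type"] == "LaunchRequest":
        # The user opened the skill without asking for anything specific.
        return speak("Welcome. Say hi to hear a greeting.", end_session=False)

    if request["type"] == "IntentRequest":
        intent_name = request["intent"]["name"]
        if intent_name == "HelloWorldIntent":  # hypothetical intent name
            return speak("Hello World!")
        if intent_name == "AMAZON.HelpIntent":
            return speak("You can say hi or howdy.", end_session=False)
        return speak("Sorry, I can't do that yet.")

    # SessionEndedRequest and anything else: nothing to say.
    return {"version": "1.0", "response": {}}


if __name__ == "__main__":
    sample_event = {
        "request": {"type": "IntentRequest", "intent": {"name": "HelloWorldIntent"}}
    }
    print(lambda_handler(sample_event, None))
```

The `sample_event` at the bottom plays the role of a test event: the same kind of JSON can be pasted into the Lambda console to try the function before connecting the ARN to the skill.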

Google Voice

1. Create a Dialogflow account or log in to an existing account. Click on ‘Create Agent’ and add details such as name, Google project, etc.

2. Go to Intent and click on ‘Create intent’. Give your intent a name and add utterances or sample phrases (similar to what we discussed above).

3. In the ‘Response’ field, type the message that you wish for your intent to respond with.
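The steps above use a static response typed into the console. When the reply has to be computed, Dialogflow can call a webhook instead. The sketch below assumes the Dialogflow ES webhook format, where the request carries `queryResult.intent.displayName` and `queryResult.parameters` and the reply is returned as `fulfillmentText`. The intent name `hello-world` and the parameter `name` are hypothetical, and the HTTP server around this function is left out.

```python
def handle_webhook(request_body):
    """Turn a Dialogflow-style webhook request (a dict) into a response dict."""
    query_result = request_body.get("queryResult", {})
    intent = query_result.get("intent", {}).get("displayName", "")
    params = query_result.get("parameters", {})

    if intent == "hello-world":  # hypothetical intent name
        # Use the extracted parameter if the user gave one.
        name = params.get("name") or "there"
        return {"fulfillmentText": f"Hello, {name}!"}

    # Unknown intent: keep the fallback short.
    return {"fulfillmentText": "Sorry, I can't help with that yet."}


if __name__ == "__main__":
    sample_request = {
        "queryResult": {
            "queryText": "hi, I am Sam",
            "intent": {"displayName": "hello-world"},
            "parameters": {"name": "Sam"},
        }
    }
    print(handle_webhook(sample_request))
```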

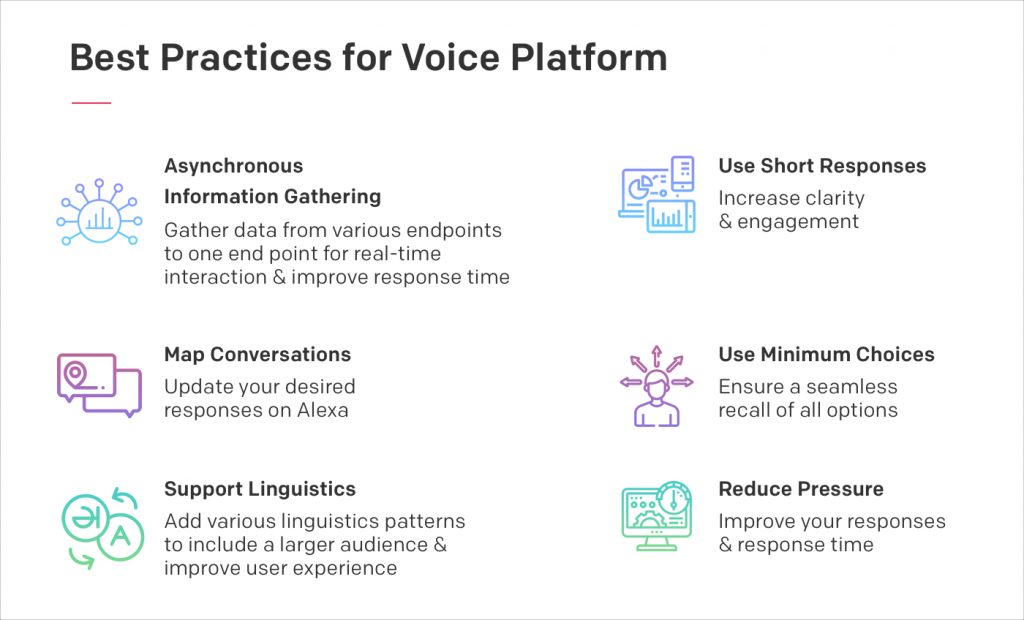

1. Asynchronous Information Gathering

Voice applications are hosted in the cloud, and gathering information asynchronously can improve response time. The information you need is often spread across several data points on different server endpoints. Any latency degrades the experience immediately: your user has to watch the light on Alexa spin until the data is fetched. This is why it is best to use an API that brings data from the various endpoints together at one endpoint for real-time interaction.
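As a rough illustration, the sketch below gathers data from three endpoints concurrently with `asyncio.gather`, so the total wait is close to the slowest call rather than the sum of all calls. The endpoint names and delays are made up, and `fetch` only simulates a network call with `asyncio.sleep`; a real skill would use an async HTTP client here.

```python
import asyncio
import time


async def fetch(name, delay):
    """Stand-in for a call to one endpoint; sleeps instead of doing real I/O."""
    await asyncio.sleep(delay)
    return name, f"{name}-data"


async def gather_all():
    """Query all (hypothetical) endpoints concurrently and merge the results."""
    results = await asyncio.gather(
        fetch("profile", 0.2),
        fetch("orders", 0.3),
        fetch("recommendations", 0.1),
    )
    return dict(results)


def main():
    """Run the concurrent fetch and report how long it took."""
    start = time.perf_counter()
    data = asyncio.run(gather_all())
    elapsed = time.perf_counter() - start
    return data, elapsed


if __name__ == "__main__":
    data, elapsed = main()
    print(data)
    print(f"fetched in {elapsed:.2f}s")
```

Fetching the three endpoints one after another would take about the sum of the delays; run concurrently, the wait should be close to the longest single delay.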

2. Mapping Conversations

Your answers on Alexa should be up to date. If you are unsure what to add, look at the FAQ section of your website. If an FAQ can’t be answered without a graphical representation, it is best to leave it out.

3. Support Linguistics

Different users express themselves differently. For instance, you may ask, “Alexa, book a cab to XYZ,” but your friend may say, “Please book me a ride to the airport.” These differences in phrasing come naturally to most of us, and we don’t want to change them just to interact with an application. An application that adjusts to our phrasing is far more useful. So, add these variations as utterances in your voice assistant to improve the experience of your users.
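The idea can be sketched with a toy matcher in which several sample utterances map to the same intent. Real platforms use trained language-understanding models rather than regular expressions, so this is only an illustration; the intent name `BookRideIntent`, the slot `destination`, and the sample phrases are hypothetical.

```python
import re

# Hypothetical sample utterances for one intent; {destination} marks the slot.
SAMPLES = [
    "book a cab to {destination}",
    "book me a ride to {destination}",
    "get me a taxi to {destination}",
    "i need a ride to {destination}",
]


def compile_sample(sample):
    """Turn one sample utterance into a regex with a named slot group."""
    prefix, _, suffix = sample.partition("{destination}")
    return re.compile(
        "^(?:please )?" + re.escape(prefix)
        + "(?P<destination>.+)" + re.escape(suffix) + "$"
    )


PATTERNS = [compile_sample(s) for s in SAMPLES]


def match_intent(utterance):
    """Return (intent, slots) for a spoken phrase, or (None, {}) if unknown."""
    # Normalize: lowercase and drop punctuation so phrasing, not typing, matters.
    text = re.sub(r"[^\w\s]", "", utterance.lower()).strip()
    for pattern in PATTERNS:
        found = pattern.match(text)
        if found:
            return "BookRideIntent", {"destination": found.group("destination")}
    return None, {}


if __name__ == "__main__":
    print(match_intent("Book a cab to XYZ"))
    print(match_intent("Please book me a ride to the airport."))
    print(match_intent("What's the weather?"))
```

Both phrasings from the example resolve to the same intent; only the slot value differs.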

4. Use Short Responses

If your responses are long, your users will soon lose interest. It is hard to concentrate when the voice assistant delivers long responses; users get impatient and leave the conversation midway. Ensure that your voice responses are short and sweet. Also, keep in mind that a sentence that looks short in writing may be irritatingly long when spoken, so take extra care to trim or remove such responses.

5. Use Minimum Choices

If you are giving your user choices, stick to a maximum of 3. Fewer choices are always better, so keep your options to a minimum. With more choices, it is hard for the user to recall the 1st choice while the assistant is reading out the 3rd or 4th.
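One way to apply this is to read options out in small batches and ask before continuing. The helper below is a minimal sketch of that idea; the function names and the exact wording of the prompts are hypothetical.

```python
def join_spoken(items):
    """Join items the way they would be read aloud: 'a, b, or c'."""
    if len(items) == 1:
        return items[0]
    if len(items) == 2:
        return f"{items[0]} or {items[1]}"
    return ", ".join(items[:-1]) + f", or {items[-1]}"


def present_choices(options, max_choices=3):
    """Split options into prompts that each list at most max_choices items."""
    prompts = []
    for start in range(0, len(options), max_choices):
        chunk = options[start:start + max_choices]
        prompt = f"You can choose {join_spoken(chunk)}."
        if start + max_choices < len(options):
            # More options remain: ask before reading the next batch.
            prompt += " Would you like to hear more options?"
        prompts.append(prompt)
    return prompts


if __name__ == "__main__":
    for line in present_choices(["pop", "rock", "jazz", "classical", "blues"]):
        print(line)
```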

6. Reduce Pressure

Reduce the pressure on your users by improving both your response time and your responses. If your voice assistant waits only a very short time after speaking, the user can feel rushed. Allow a reasonable amount of time to answer, or, if you have to keep the wait short, make the next sentence a supportive one. For example: “Would you like me to repeat the list?”

Challenges of Voice Assistants

Voice Tech is Ambiguous

Voice tech is often ambiguous because it fails to reveal what users can achieve with it. With visual tech, users can discover functionality through buttons, images, labels, etc. Nothing like this is possible with voice.

Further, retaining something you hear is usually harder than retaining something you read or see. That makes it hard to know the best way to present options when the application offers several for a particular action.

Privacy Concerns

Privacy is a cause for concern for every business, and more so for those that offer voice assistants.

Why? Let’s find out:

While Siri is programmed to your voice and activates whenever you say ‘Hey Siri’, anyone can ask it a question. Siri simply doesn’t know the difference between your voice and someone else’s.

How can it impact us? Most obviously, it affects permissions.

Think of a child ordering several toys through Alexa. This would happen without parental authorization. It also means that anyone near your Alexa could misuse your credit card. Voice technology and assistants are convenient, but security remains a major concern.

Conclusion

Today’s consumer-led era demands platforms and technologies that can simplify and step up the customer service experience. Voice technology is revolutionizing customer experience and enabling personalization at a whole new level. Proactive strategies based on customer feedback, browsing, and purchase trends have become much easier to implement, pushing companies to upgrade their customer experience models with the backing of technology.

Factors like speed, convenience, and personalization are key differentiators that influence customer decisions and buying behavior, and voice technologies like Google Voice and Alexa Skills make them far easier to deliver. With most businesses switching to technology-based solutions, voice technology is likely to play a crucial role for organizations driven by customer service excellence.