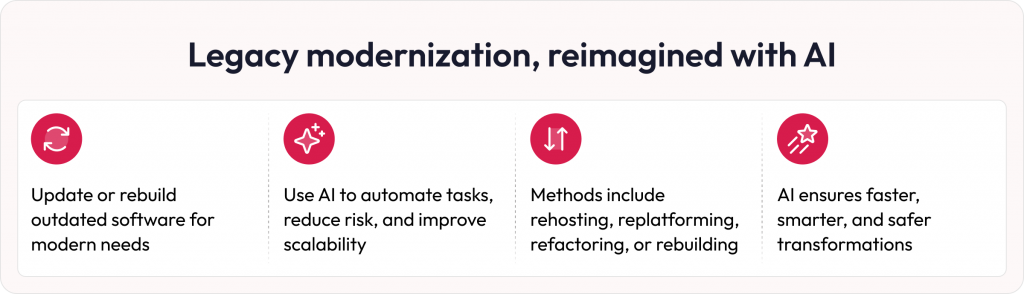

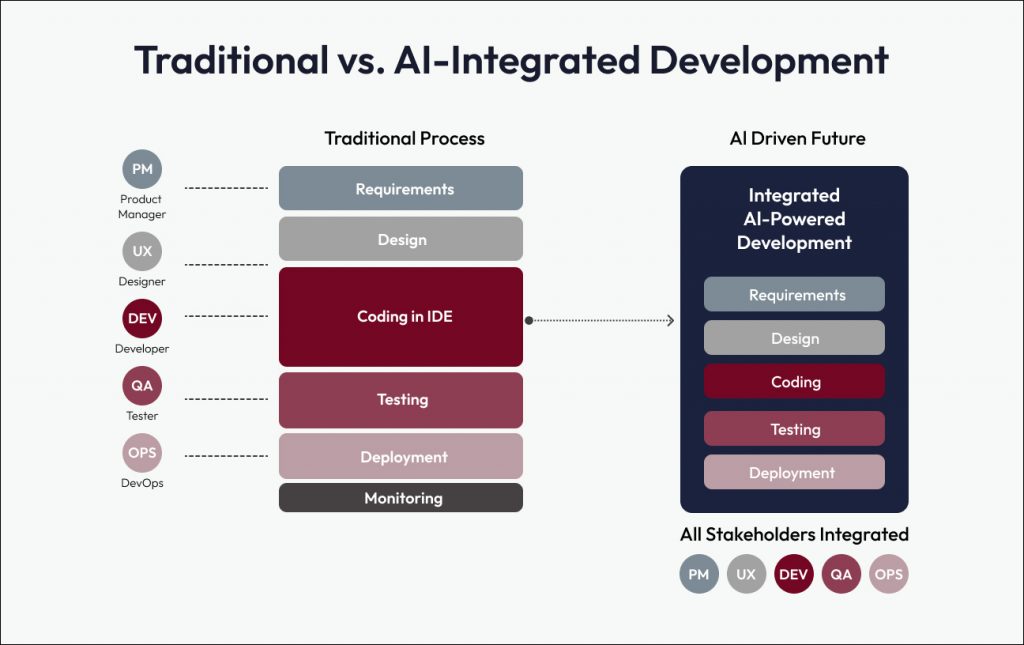

As digital products evolve into complex, interconnected systems, experience can no longer be treated as a surface layer applied at the end of development. It must be intentionally designed, rigorously built, and continuously improved. For leaders navigating platform modernization, digital transformation, and AI adoption, this shift is imperative.

At Robosoft Technologies, we call this Engineering Human Experiences, combining human insight, product thinking, and enterprise‑grade engineering to build digital systems that are meaningful, resilient, and built for impact.

The growing role of AI in design is accelerating this shift, not by replacing creativity but by changing how experiences are engineered, scaled, and governed across organizations.

What we increasingly see in enterprises is AI being added to the design workflow without any change to how experience decisions are governed. The result is more output at higher velocity, but not necessarily better experiences, because consistency, accessibility, performance, privacy, and trust still depend on rigorous operating standards rather than on tool adoption.

Experience is a system

Today’s digital experiences are shaped by far more than interfaces. They are shaped by intelligent systems driven by data and AI, platforms that must scale securely and reliably, and users who expect clarity, trust, reliability, and empathy at every interaction.

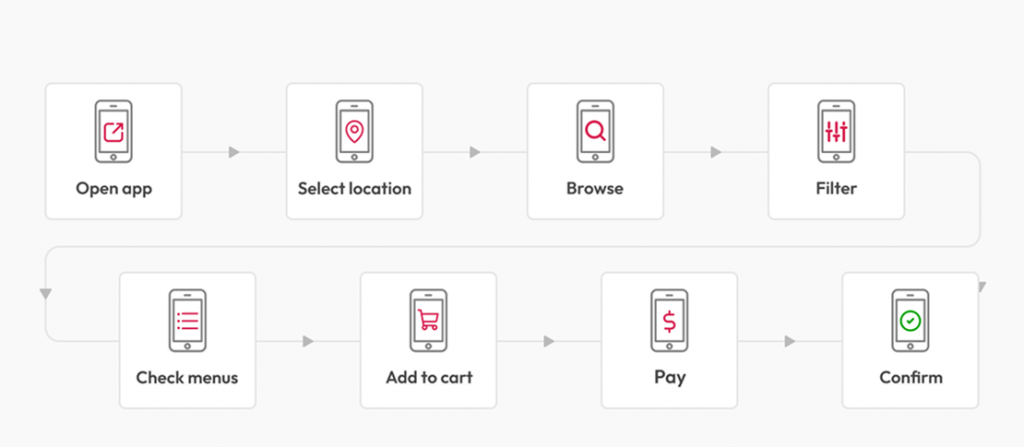

A travel rebooking, a password reset, or a filtered content search: each is a moment of user interaction in which the system demonstrates whether it understands intent, respects data, and makes discovery effortless. Design choices inside these moments are engineering choices; they either strengthen the system or create friction.

In this context, experience is not a deliverable. It is a system. AI in design makes that system more observable and adaptable, but only when it is backed by robust engineering practices.

Design decisions now directly affect:

- Performance: latency, responsiveness, system load

- Accessibility: inclusive experiences across users and contexts

- Compliance: regulatory and data obligations

- Long‑term scalability: consistency across platforms and regions

Treating experience as an engineering discipline is no longer optional; it is essential.

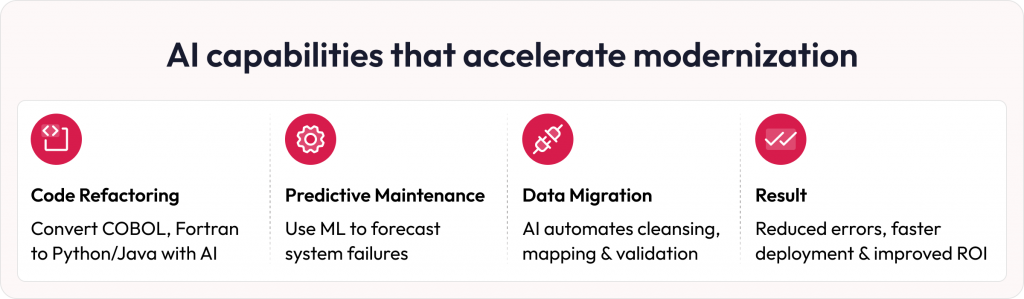

How AI strengthens the design lifecycle

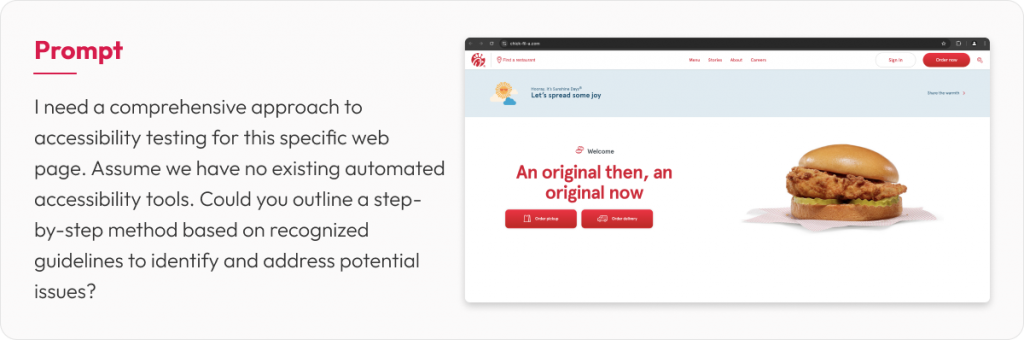

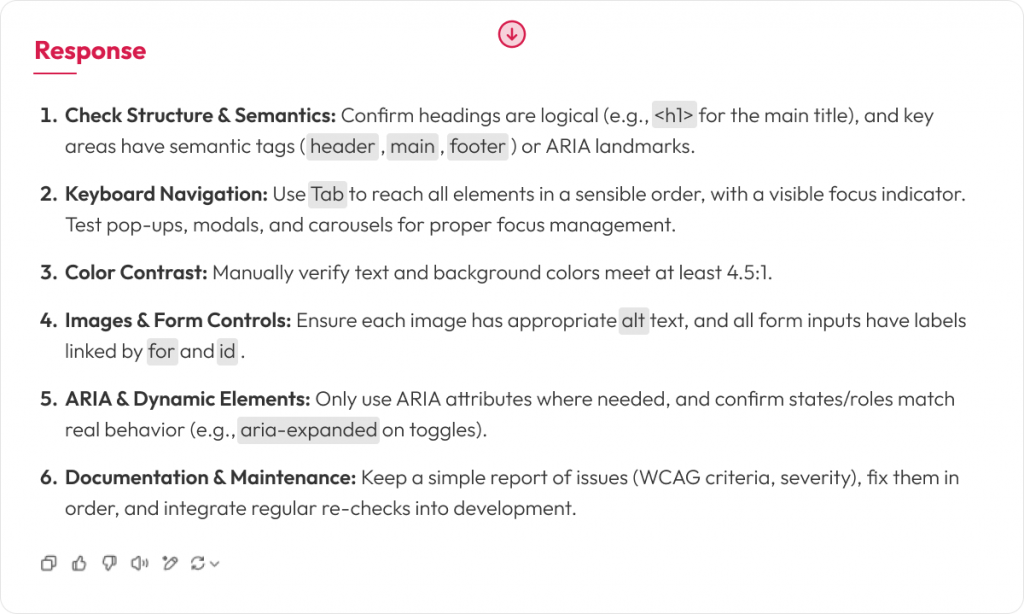

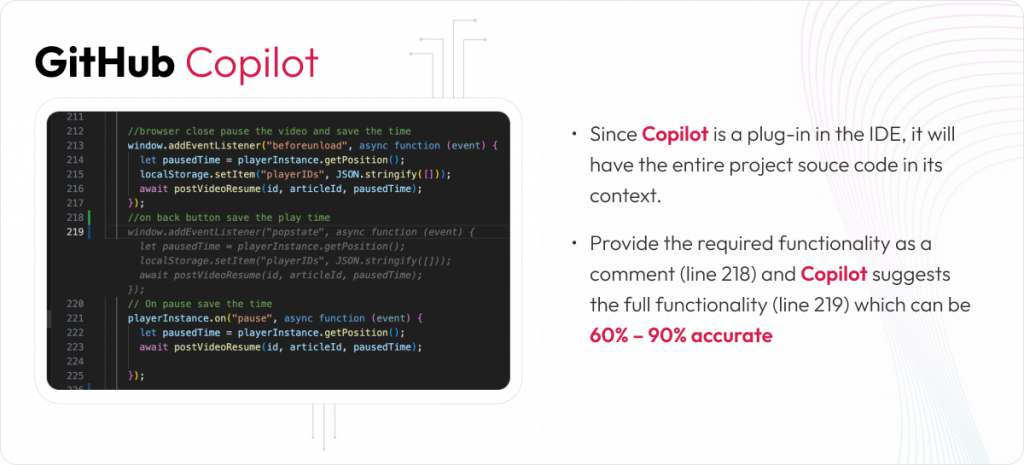

AI is increasingly embedded across the design lifecycle, from research and exploration to validation and optimization. When applied thoughtfully, AI improves the operating quality of design by shortening feedback loops and making experience performance observable across systems, not just screens.

In practice, teams can interrogate behavioral signals at scale, pressure‑test more variants across more user contexts, and surface experience risks earlier, before they become expensive fixes in engineering or support. AI also strengthens design‑system consistency by detecting drift across components, patterns, and platforms as products scale.

In delivery, AI can surface accessibility risks, suggest performance‑aware alternatives for latency‑sensitive flows, and support safer testing. The impact is not simply faster design but fewer late‑stage rework cycles, more predictable releases, and higher experience consistency across teams and products.

AI in design and the role of judgment

AI in design does not automatically create better experiences. Without human context, product judgment, and engineering rigor, AI‑generated outputs risk becoming generic, fragmented, or misaligned with real user needs.

AI tools are effective at optimizing for what has worked before. Still, they do not understand emotional nuance, situational stakes, or trust dynamics, such as those facing a user making a high‑risk financial or procurement decision. Those moments require human judgment: understanding why users behave the way they do and what truly matters in context.

What engineering human experiences actually means

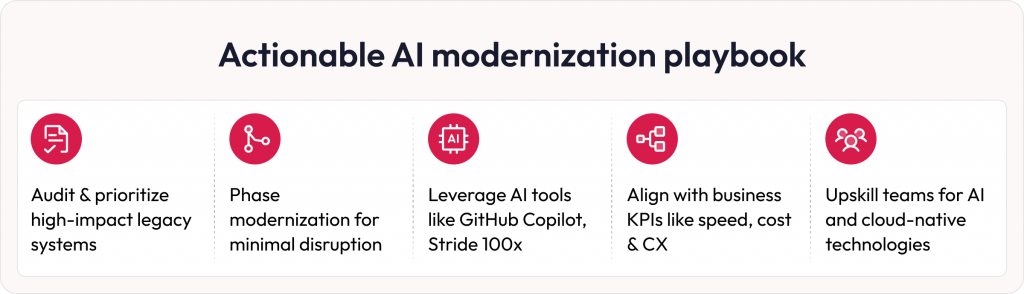

At Robosoft, we believe the future of design is grounded in engineering human experiences, not automating creativity. This means designing with empathy and purpose, building scalable and resilient systems, embedding quality, privacy, and performance from the start, and ensuring experiences can evolve responsibly over time.

AI for Design and Design for AI

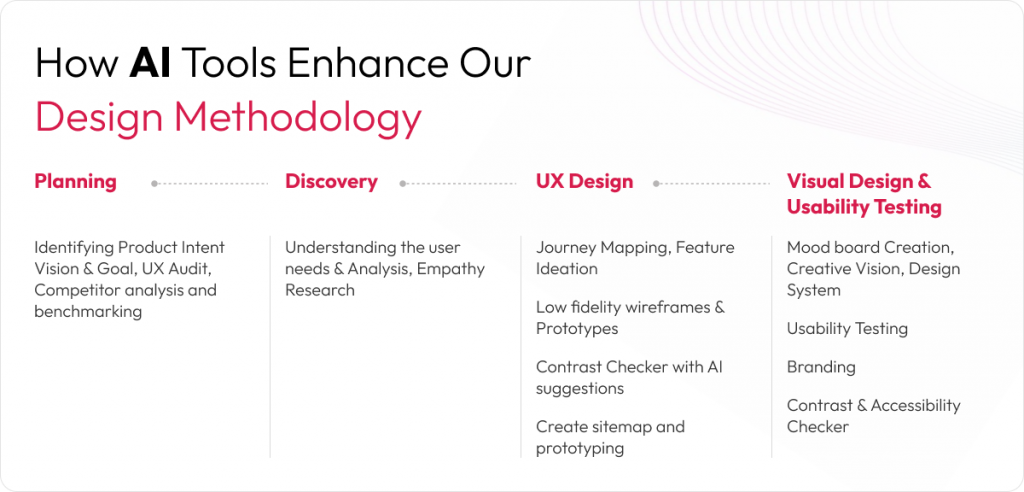

At Robosoft, we approach AI and experience design through two complementary lenses, and we actively work across both:

AI for Design

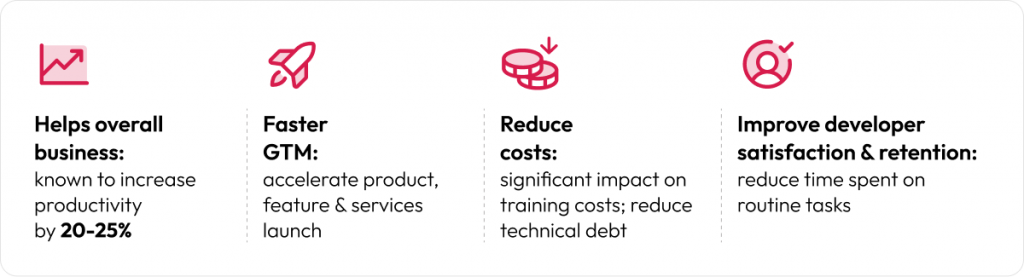

Using AI to make the design process more efficient and productive by accelerating research, exploration, validation, and design‑system consistency so teams can focus on higher‑order decisions and complex experience challenges.

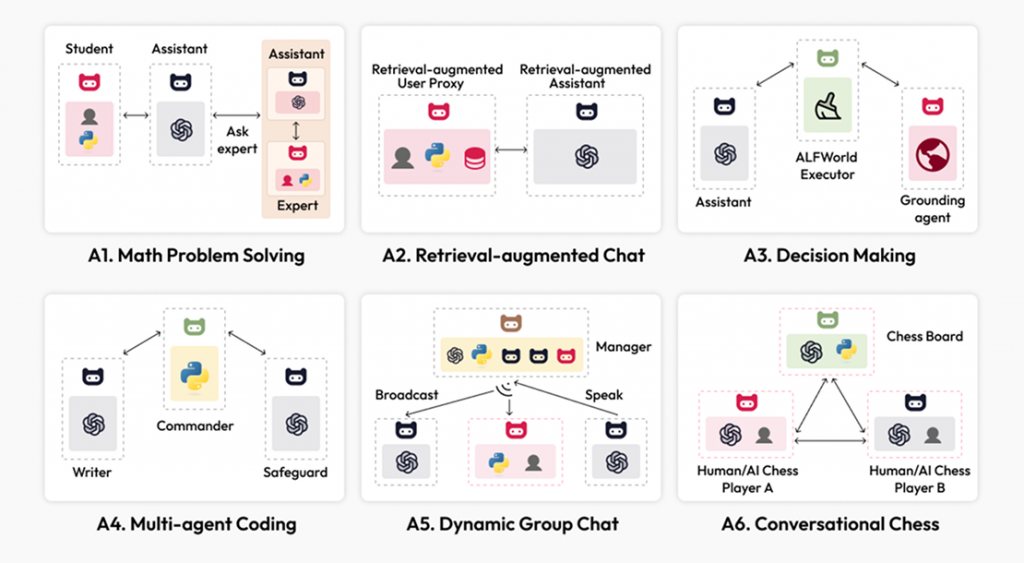

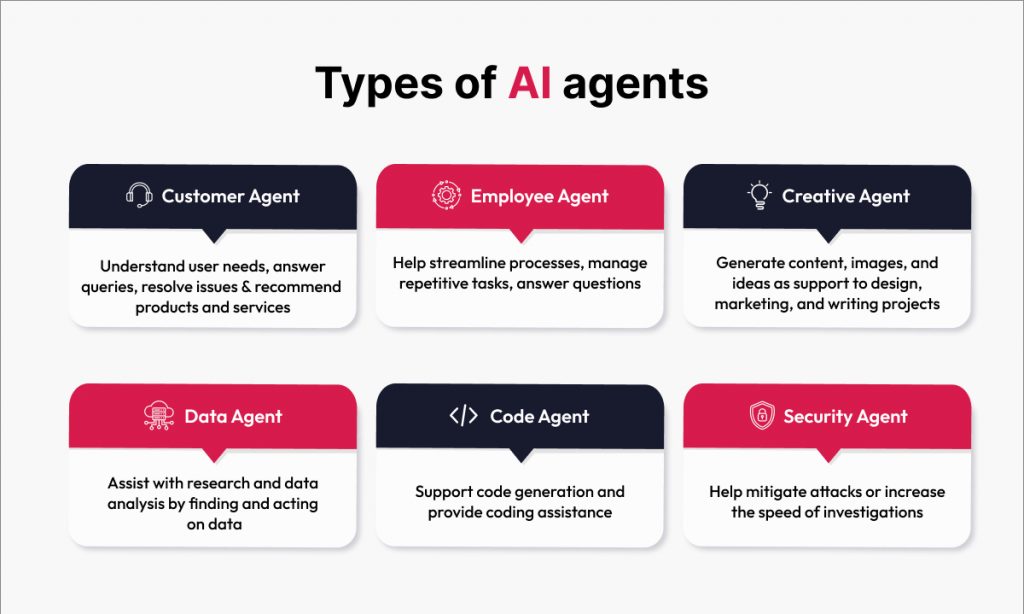

Design for AI

Designing AI‑native products and platforms, including experiences that support agentic behavior responsibly. This means designing how users set intent, how autonomy is bounded, how exceptions are handled, and how trust is maintained through clarity, control, and accountability.

Both are required. AI for Design improves productivity. Design for AI determines whether AI‑enabled experiences remain usable, governable, and trusted at scale.

The disciplines behind engineered experiences

In practice, engineering human experiences rests on four disciplines:

- Empathy: deep research that goes beyond usability to understand behavioral and emotional context

- Engineering rigor: treating accessibility, performance, privacy, and compliance as design requirements

- Governance: principles, standards, and controls that scale across teams and products

- Accountability: connecting design decisions to measurable business outcomes

AI becomes a powerful enabler within this framework, accelerating insight and execution, while engineering ensures trust, reliability, and durability.

Why scale makes governance a differentiator

As organizations scale, isolated design efforts break down quickly. Experience must operate across products, platforms, devices, regions, regulations, and user segments.

AI in design can increase adaptability and consistency at scale, but scale also raises the cost of inconsistency. Governance becomes a performance advantage: clear principles, shared standards, and engineering‑grade quality controls that hold across teams, products, and markets.

This is where experience, software, data, and AI must operate as one system.

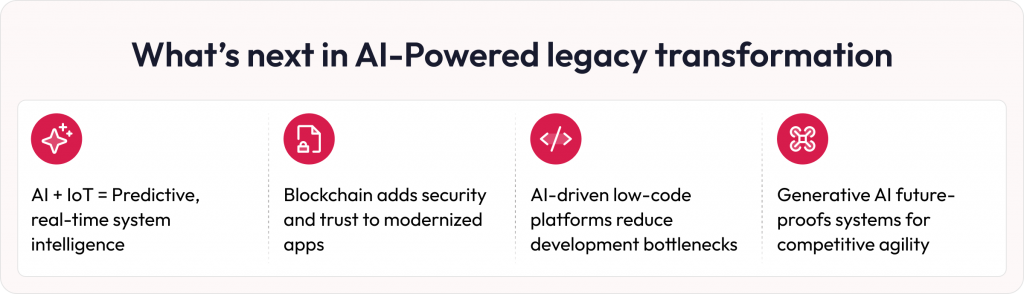

The path forward

AI in design will continue to evolve. Tools will change. Expectations will rise. What will endure is the need to engineer experiences that solve real user problems and drive measurable business outcomes.

For leaders evaluating how AI can strengthen digital experiences without weakening trust, clarity, performance, or governance, the practical question is whether the organization is building both:

- AI for Design: productivity in how experiences are created

- Design for AI: AI‑native experiences that remain accountable at scale

Robosoft Technologies partners with enterprises to engineer human experiences by combining human insight, product thinking, and enterprise‑grade engineering, so that experience quality improves as systems scale and AI adoption deepens.

If you’re looking to embed this approach into your transformation vision to engineer human experiences at scale, we’d be glad to start that conversation.