At Robosoft Technologies, we work with global enterprises navigating the shift from digital transformation to enterprise‑scale AI adoption. Across industries, we see a consistent pattern: while Generative AI has delivered measurable productivity gains, many organizations struggle to convert AI‑driven intelligence into execution at scale.

Our experience building and scaling complex digital platforms, where experience design, engineering, data, and operations converge, has shown us that the next phase of enterprise AI will not be defined by better answers alone. It will be defined by AI systems that can act, adapt, and execute responsibly within real business environments.

This perspective shapes how we view the growing distinction between Generative AI and Agentic AI, and why this distinction is becoming increasingly important for enterprise leaders.

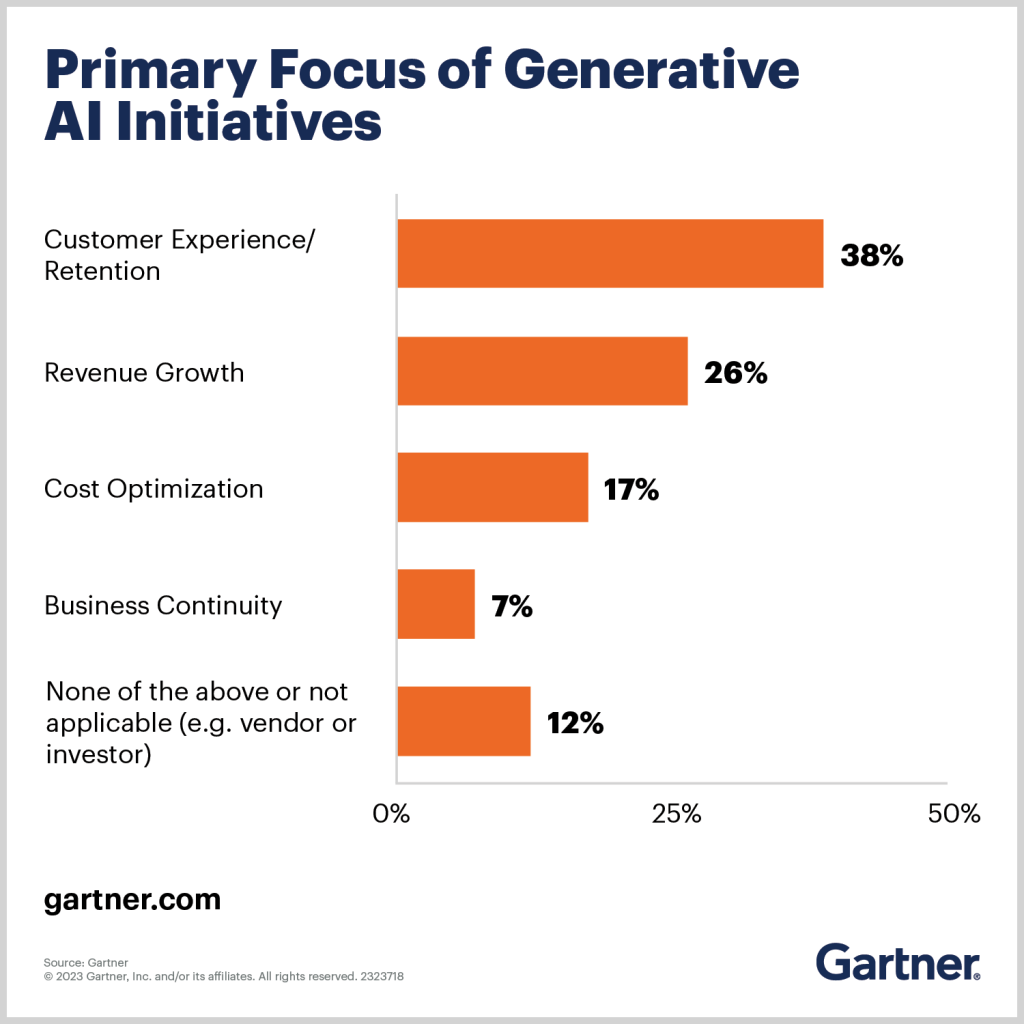

Over the past two years, Generative AI adoption has accelerated rapidly. AI copilots, chatbots, and content engines are now embedded across marketing, customer service, product development, and IT teams. These systems have improved speed, efficiency, and access to information. Yet for many CIOs and digital leaders, a fundamental limitation is becoming clear: execution still depends heavily on humans.

Generative AI can generate insights, content, and recommendations, but people continue to coordinate workflows, manage handoffs, resolve exceptions, and drive outcomes across enterprise systems. The gap between AI‑generated output and business execution remains largely manual.

This execution gap has led to the emergence of Agentic AI, a new class of AI systems designed to move beyond generation and into autonomous execution. As enterprises shift from AI experimentation to scaled deployment, understanding the difference between Agentic AI and Generative AI is no longer optional.

To understand Generative AI vs. Agentic AI, it helps to start with what Generative AI does best.

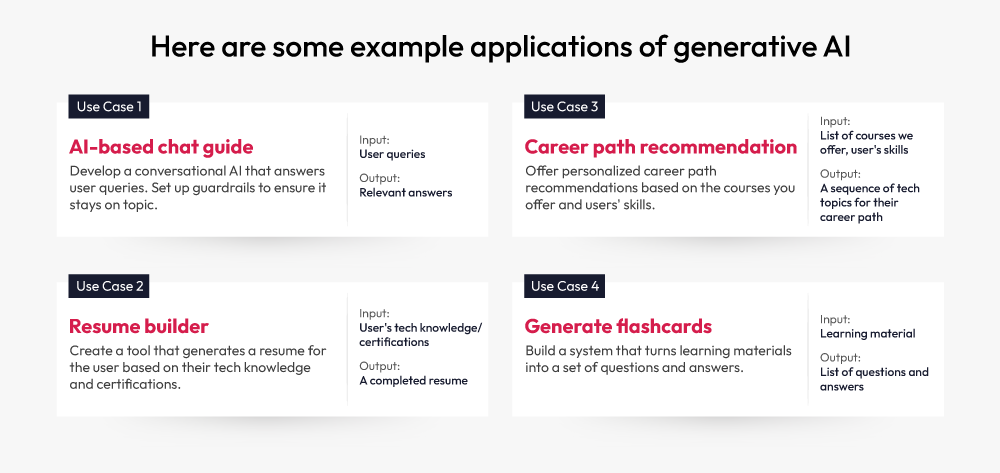

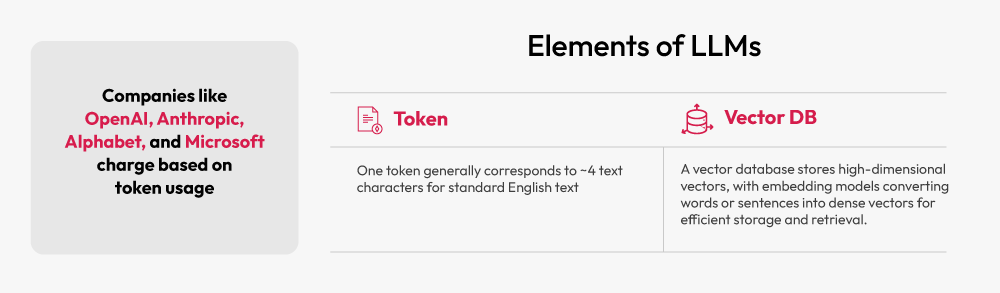

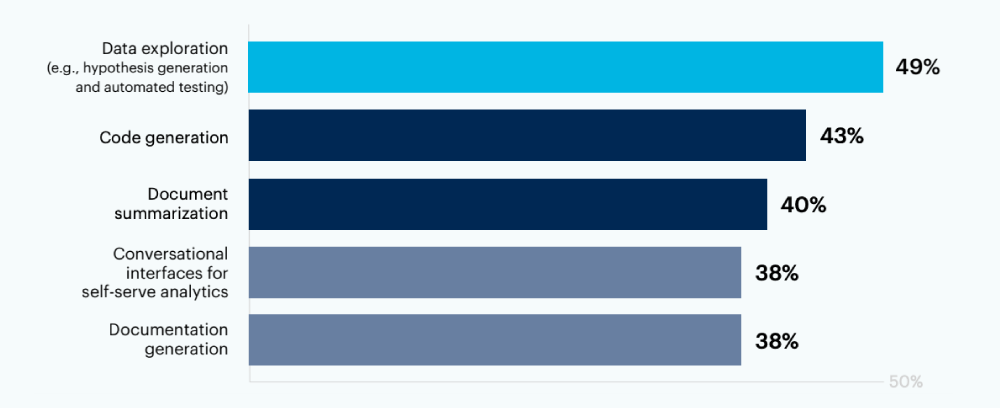

Generative AI represents a major advancement in how organizations work with data and knowledge. It excels at creating text, code, images, and summaries, and delivers value across customer support, content creation, personalization, and software development. Its core strength lies in fluency and pattern recognition.

However, Generative AI is inherently reactive and prompt‑driven. It operates within defined tasks and requires human direction to move work forward. While it augments individual productivity, it does not independently plan or execute multi‑step business processes. As a result, it enhances work but does not fundamentally change how work flows through the enterprise.

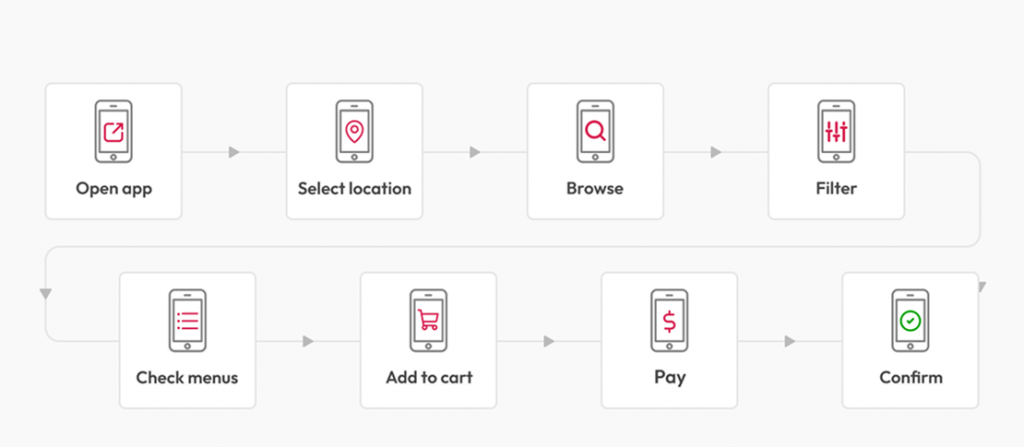

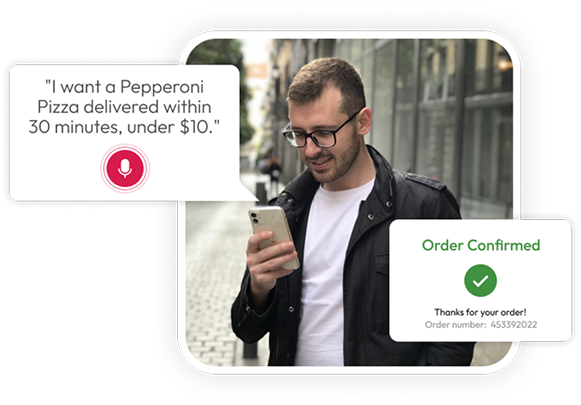

Agentic AI builds on Generative AI and machine learning but introduces goal‑directed autonomy. Instead of responding to prompts, agentic systems are designed to understand objectives, decompose them into tasks, and initiate actions across enterprise applications. These systems can adapt to changing conditions, retain context over time, and learn from execution outcomes.

In practice, Agentic AI behaves less like an assistant and more like a digital execution layer. It can take a business goal, determine the required steps, interact with APIs and data sources, monitor progress, and adjust its approach in real time. Over time, the outcomes it records can form an institutional memory that informs and improves later decision‑making.
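To make that loop more concrete, here is a minimal Python sketch of a goal-driven agent, assuming a planner that returns a fixed list of steps and a registry of tools that each wrap one enterprise API. Everything in it (`Step`, `AgentRun`, `run_agent`, the tool names, the `max_retries` parameter, the "Acme" account) is hypothetical and for illustration only; in a real system the planner would typically be a language model and the tools would call live systems. "Adjusting the approach" is deliberately simplified here to retrying a failed step and then escalating it to a human.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical tool signature: each tool wraps one enterprise API call
# and returns a dict that includes an "ok" flag.
Tool = Callable[[dict], dict]


@dataclass
class Step:
    tool: str   # name of the tool to invoke
    args: dict  # inputs for that tool


@dataclass
class AgentRun:
    goal: str
    completed: list[str] = field(default_factory=list)    # steps that succeeded
    memory: list[dict] = field(default_factory=list)      # context retained across steps
    escalations: list[str] = field(default_factory=list)  # steps handed to a human


def run_agent(goal: str,
              planner: Callable[[str], list[Step]],
              tools: dict[str, Tool],
              max_retries: int = 2) -> AgentRun:
    run = AgentRun(goal=goal)
    for step in planner(goal):               # decompose the goal into steps
        tool = tools.get(step.tool)
        if tool is None:                      # no capability for this step
            run.escalations.append(step.tool)
            continue
        for attempt in range(max_retries + 1):
            # Act, passing along everything recorded so far as context.
            result = tool({**step.args, "context": run.memory})
            # Record the outcome so later steps can build on it.
            run.memory.append({"step": step.tool, "attempt": attempt, "result": result})
            if result.get("ok"):             # monitor: did the step succeed?
                run.completed.append(step.tool)
                break
        else:
            # Adjust (simplified): after repeated failure, escalate to a human.
            run.escalations.append(step.tool)
    return run


# Usage with stub tools standing in for a sales workflow (all hypothetical).
def sales_planner(goal: str) -> list[Step]:
    account = {"account": "Acme"}
    return [Step("research_prospect", account),
            Step("update_crm", account),
            Step("schedule_meeting", account)]


stub_tools = {
    "research_prospect": lambda a: {"ok": True, "notes": f"profile of {a['account']}"},
    "update_crm": lambda a: {"ok": True},
    "schedule_meeting": lambda a: {"ok": False, "error": "no open calendar slot"},
}

run = run_agent("Book a discovery call with Acme", sales_planner, stub_tools)
print(run.completed)    # ['research_prospect', 'update_crm']
print(run.escalations)  # ['schedule_meeting']
print(len(run.memory))  # 5: one entry each for the first two steps, three attempts for the last
```

The shared `memory` list is the simplest possible stand-in for retained context; production systems would more likely persist it in a database or vector store so it survives across runs.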

The distinction becomes clear in real enterprise use cases. In sales operations, Generative AI may draft outreach emails or summarize account data. Agentic AI can go further: researching prospects, prioritizing leads, personalizing communication, updating CRM systems, scheduling meetings, and escalating risks autonomously.

In procurement, agentic systems can evaluate suppliers, compare quotes, generate purchase orders, and trigger approvals. In recruitment, they can screen candidates, schedule interviews, and manage follow‑ups. In finance and compliance, they can support audit preparation, policy checks, and exception handling.

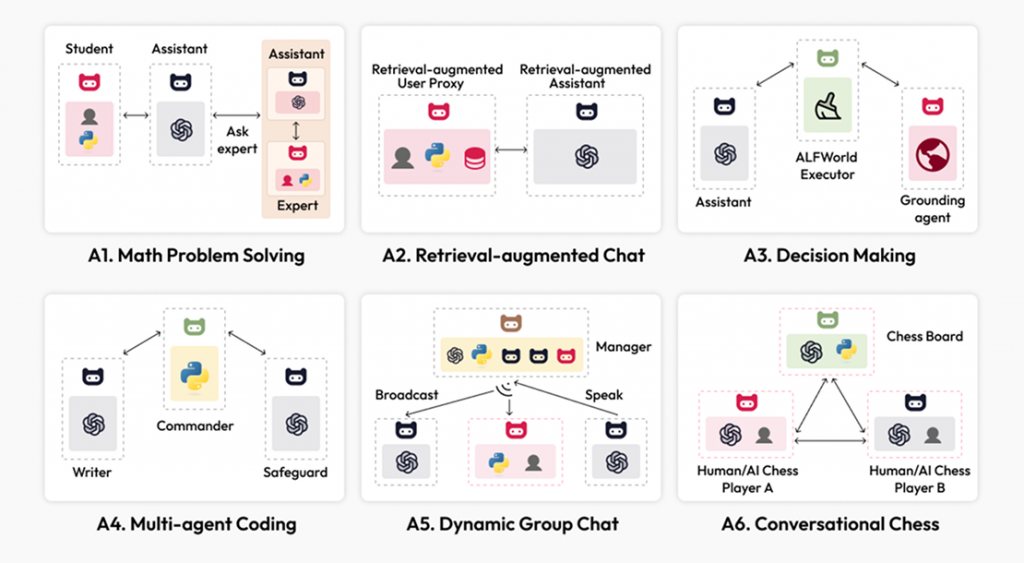

These are not isolated automations. They represent end‑to‑end workflow ownership. In more advanced scenarios, multiple AI agents operate together as a coordinated system. One agent may monitor inventory, another forecast demand, another negotiate with vendors, and another manage logistics. These agents share context, collaborate, and adapt dynamically, much like a cross‑functional human team.

For enterprise leaders, this distinction directly impacts AI investment strategy. Generative AI is best suited for use cases focused on creation, insight, and communication. Agentic AI becomes essential when the objective is execution, coordination, and measurable business outcomes.

Organizations that rely solely on Generative AI will continue to see incremental productivity gains. Those that adopt Agentic AI will begin to reshape operating models, reduce decision latency, and lower the cost of coordination. This marks a shift from AI‑assisted work to AI‑enabled execution.

Agentic AI also introduces new challenges. These systems are still maturing and require careful architectural design. Persistent memory, planning logic, orchestration, monitoring, and fail‑safe mechanisms are critical. As autonomy increases, governance, accountability, transparency, and human oversight become even more important.
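As one illustration of fail-safes and human oversight, the Python sketch below routes any action above a value threshold to a human reviewer, keeps the run going when a single action fails, and records every decision in an audit log for transparency. The `Action` type, `execute_with_oversight`, the `approval_limit` of 10,000, and the purchase-order names are all hypothetical, invented for this example rather than taken from any product or library.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Action:
    name: str
    amount: float  # e.g. value of a purchase order; used here as a simple risk signal


def execute_with_oversight(actions: Iterable[Action],
                           perform: Callable[[Action], None],
                           approve: Callable[[Action], bool],
                           approval_limit: float = 10_000.0) -> list[tuple[str, str]]:
    """Run actions, gating high-value ones behind human approval and logging every outcome."""
    audit_log: list[tuple[str, str]] = []
    for action in actions:
        needs_review = action.amount > approval_limit
        if needs_review and not approve(action):
            # Human oversight: the reviewer can block an action before it runs.
            audit_log.append((action.name, "rejected by reviewer"))
            continue
        try:
            perform(action)
            status = "executed after approval" if needs_review else "executed"
        except Exception as exc:
            # Fail-safe: a failing action is logged and the run continues,
            # instead of crashing the whole workflow.
            status = f"failed: {exc}"
        audit_log.append((action.name, status))
    return audit_log


# Usage with hypothetical purchase orders and stand-in callbacks.
done: list[str] = []


def perform(action: Action) -> None:
    if action.name == "PO-licenses":
        raise RuntimeError("ERP timeout")  # simulated downstream failure
    done.append(action.name)


def reviewer(action: Action) -> bool:
    return False  # stand-in for a human decision; rejects everything it is shown


orders = [Action("PO-office-supplies", 800.0),
          Action("PO-servers", 250_000.0),
          Action("PO-licenses", 5_000.0)]
log = execute_with_oversight(orders, perform, reviewer)
print(log)
# [('PO-office-supplies', 'executed'), ('PO-servers', 'rejected by reviewer'), ('PO-licenses', 'failed: ERP timeout')]
print(done)  # ['PO-office-supplies']
```

Using a monetary amount as the risk signal is only one possible choice; the same gate could key off the action type, the system being touched, or the agent's own confidence.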

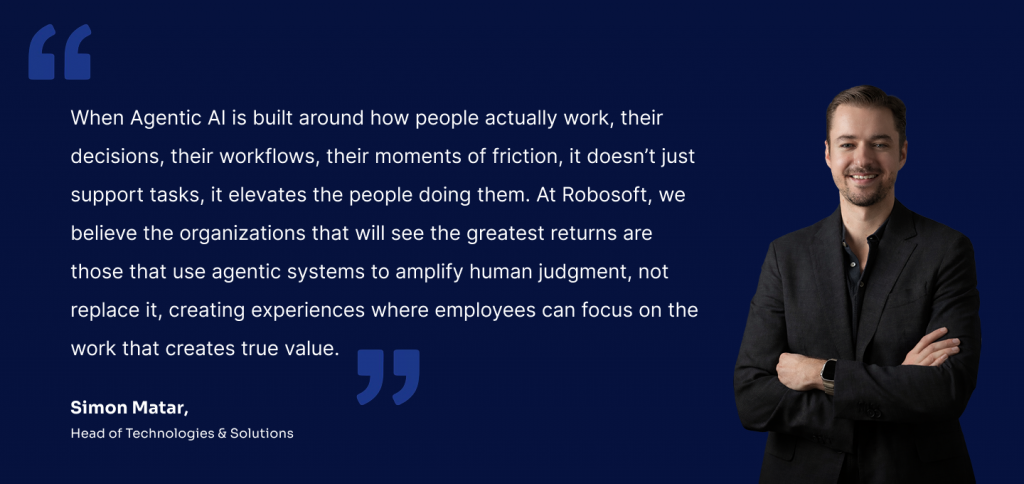

At Robosoft, we don’t see Agentic AI as just another technology layer; it’s a transformational enterprise capability. Through our work across digital experience, platform engineering, and data ecosystems, we’ve seen that the real value of autonomous AI doesn’t come from adding more agents. It comes from knowing where autonomy truly drives leverage and where human judgment must remain at the center.

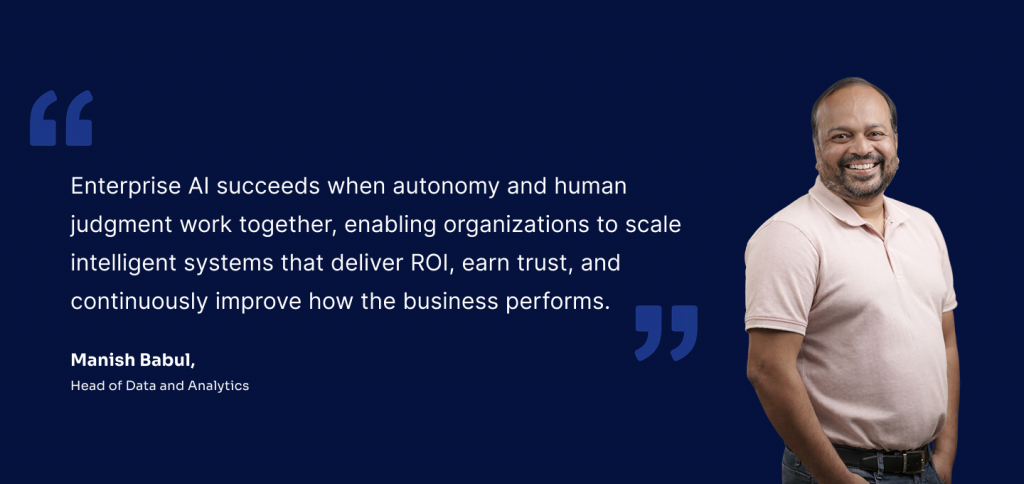

AI for the enterprise must be designed to scale, integrate, and prove measurable ROI. Without that, Agentic AI risks becoming just a collection of isolated agents solving narrow tasks: useful, but not a real differentiator for the business.

That’s why we focus on reimagining how work flows through the organization, rather than simply layering AI on top of existing processes. We combine Generative AI for insight and expression with Agentic AI for execution, built on architectures that are scalable, governed, secure, and aligned to tangible business outcomes.

The next phase of enterprise AI won’t be defined by who can generate the best answers; it will be defined by who can execute better, faster, and more intelligently at scale, with AI embedded in the operating fabric of the organization.

For enterprise leaders, the question is no longer whether to adopt AI. The real question is how to design AI systems that can operate responsibly at scale, deliver measurable value, and continuously improve over time.

At Robosoft, we partner with CIOs and digital leaders to identify where autonomy can create meaningful impact across workflows, platforms, and customer journeys, while ensuring the right balance of governance, control, and human oversight.

If you’re rethinking how AI fits into your operating model and want to move beyond experimentation toward enterprise-grade, ROI-driven AI, we’d be glad to start that conversation.