AI in software testing is transforming how software is planned, built, and maintained. It simplifies testing workflows and can significantly improve productivity and efficiency across teams:

- For QA teams: automate regression tests, focus on exploratory work, and reduce script maintenance.

- For developers: accelerate test automation with a minimal learning curve.

- For business analysts & PMs: quickly create and run tests without coding or extensive training.

This blog explores how AI is helping Quality Assurance (QA) by speeding up test case generation, improving regression testing accuracy, and enhancing predictive test analysis.


AI-driven methods in automation testing

AI in software testing brings speed, accuracy, and adaptability to an often-complex process. Let us look at a few AI-driven methods that help teams deliver value:

- Self-healing automation

Frequent code changes can break traditional test scripts, draining time and resources. With self-healing automation, AI detects when a locator or step no longer matches the application and updates the script automatically, reducing manual intervention and keeping tests accurate as the application evolves.
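Commercial tools implement self-healing in different ways, often with models trained on many element attributes. As a simplified sketch of the core idea, assume each test records a "fingerprint" of an element's attributes when it last passed. If the primary locator (here, the `id`) stops matching, the lookup falls back to the most similar element and reports its new locator so the script can be updated. `Element`, `find_with_healing`, the fingerprint format, and the 0.5 threshold are all hypothetical, chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    """Simplified stand-in for a page element (hypothetical, for illustration)."""
    tag: str
    attrs: dict = field(default_factory=dict)
    text: str = ""


def similarity(fingerprint, element):
    """Fraction of the recorded attributes that a candidate element still matches."""
    if not fingerprint:
        return 0.0

    def current(key):
        if key == "tag":
            return element.tag
        if key == "text":
            return element.text
        return element.attrs.get(key)

    hits = sum(1 for key, expected in fingerprint.items() if current(key) == expected)
    return hits / len(fingerprint)


def find_with_healing(page, locator_id, fingerprint, threshold=0.5):
    """Find an element by id; if that id no longer exists, 'heal' by choosing the
    element most similar to the fingerprint recorded when the test last passed.

    Returns (element, locator_id_to_use) or raises LookupError.
    """
    for el in page:
        if el.attrs.get("id") == locator_id:
            return el, locator_id  # primary locator still works
    # Primary locator is broken: score every candidate against the fingerprint.
    scored = [(similarity(fingerprint, el), el) for el in page]
    if not scored:
        raise LookupError(f"page is empty; cannot locate {locator_id!r}")
    best_score, best = max(scored, key=lambda pair: pair[0])
    if best_score < threshold:
        raise LookupError(f"no element resembles {locator_id!r}")
    # Report the new id so the test script can be updated.
    return best, best.attrs.get("id")
```

For example, if a release renames a button's id from `btn-submit` to `btn-submit-v2` but keeps its tag, class, and label, the lookup returns the renamed button together with the new id, which a tool could then write back into the script. The threshold keeps the fallback from silently matching an unrelated element.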

- Intelligent regression testing

Validating existing features after introducing new ones can be time-consuming. AI selects and runs the regression tests relevant to each code change, accelerating test cycles and freeing teams to focus on strategic, creative problem-solving.
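AI-based tools typically learn which tests relate to which code from history. A simpler, deterministic form of the same idea, usually called test impact analysis, uses per-test coverage data instead. The sketch below assumes such a coverage map is already available from an earlier coverage run; `select_tests` and the file names are hypothetical.

```python
def select_tests(changed_files, coverage_map):
    """Pick the tests affected by a change (test impact analysis).

    changed_files: paths modified in the commit or pull request.
    coverage_map: test name -> set of source files that test exercised,
        assumed to come from an earlier coverage run.
    """
    changed = set(changed_files)
    covered = set().union(*coverage_map.values())
    if changed - covered:
        # Some changed file has no known tests: run everything to stay safe.
        return sorted(coverage_map)
    return sorted(test for test, files in coverage_map.items() if files & changed)


coverage_map = {
    "test_login": {"auth.py", "session.py"},
    "test_checkout": {"cart.py", "payment.py"},
    "test_profile": {"auth.py", "profile.py"},
}
print(select_tests(["auth.py"], coverage_map))  # ['test_login', 'test_profile']
```

Falling back to the full suite when a changed file has no known tests is a conservative design choice. It trades some speed for the assurance that new or untested code never skips regression testing entirely.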

- Defect analysis and scheduling

Machine learning identifies high-risk areas in the code, using signals such as change history and past defects, and prioritizes the corresponding test cases. This focuses testing effort where it matters most, while intelligent scheduling makes better use of the available test infrastructure.
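Production tools train their models on repository and defect history. As a stand-in, the sketch below scores each file with a hand-weighted logistic function of two common risk signals, recent churn and past defect count, and then orders tests by the highest risk among the files they exercise. The weights, input format, and function names are illustrative assumptions, not values learned from real data.

```python
import math


def risk_score(churn, past_defects, w_churn=0.05, w_defects=0.8, bias=-3.0):
    """Logistic risk estimate in (0, 1).

    churn: lines changed in the file recently; past_defects: bugs traced to it.
    The weights are illustrative placeholders, not values learned from data.
    """
    z = bias + w_churn * churn + w_defects * past_defects
    return 1.0 / (1.0 + math.exp(-z))


def prioritize(tests, file_stats, coverage_map):
    """Order tests so that those exercising the riskiest files run first.

    file_stats: file -> (churn, past_defects)
    coverage_map: test -> set of files the test exercises
    """
    file_risk = {f: risk_score(*stats) for f, stats in file_stats.items()}

    def test_risk(test):
        files = coverage_map.get(test, ())
        # Files without stats are treated as zero risk.
        return max((file_risk.get(f, 0.0) for f in files), default=0.0)

    return sorted(tests, key=test_risk, reverse=True)


file_stats = {"payment.py": (120, 4), "auth.py": (10, 1), "profile.py": (2, 0)}
coverage_map = {
    "test_checkout": {"cart.py", "payment.py"},
    "test_login": {"auth.py"},
    "test_profile": {"profile.py"},
}
print(prioritize(["test_profile", "test_login", "test_checkout"], file_stats, coverage_map))
# ['test_checkout', 'test_login', 'test_profile']
```

A trained model would replace `risk_score` with learned weights and possibly more features, but the scheduling step, running the riskiest tests first, stays the same.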

Challenges and considerations in integrating AI in software testing

With AI-driven programming assistants such as GitHub Copilot, Amazon CodeWhisperer (now part of Amazon Q Developer), and Tabnine, teams can automate repetitive tasks, reduce human errors, and improve software quality while maintaining rapid release cycles. However, AI is not a silver bullet. It excels at automation and pattern recognition, but it complements human expertise rather than replacing it: teams still need to integrate it thoughtfully into their development processes and provide oversight for context and judgment.

1. Data dependency

AI-driven testing depends on large volumes of high-quality data, and poor training data can lead to unreliable test recommendations. Sourcing, curating, and maintaining these datasets is time-intensive, which adds complexity to integrating AI into software development.

2. Demand for skilled AI developers

AI enhances efficiency, but harnessing its full potential requires expertise. Skilled AI developers are essential to fine-tune models, interpret results, and optimize AI-driven testing. As demand for AI specialists rises, organizations face challenges acquiring the right talent to drive innovation.

3. Adapting to AI-driven workflows

Shifting from traditional testing to AI-based approaches requires flexibility. Teams accustomed to manual testing may hesitate to adopt AI tools. Training and real-world demonstrations help bridge this gap.

4. Safeguarding data and privacy

When sensitive information is involved, security and compliance become paramount. Especially in heavily regulated industries, teams must ensure the AI tools they use protect proprietary data and meet all legal requirements.

5. Addressing technical and resource needs

Deploying AI-driven testing at scale requires thoughtful investment, which may include software upgrades or additional hardware capacity. Although adoption carries upfront costs in training and resources, the efficiency gains can make AI a strategic asset over time.

Key tasks that AI can automate

AI can quickly learn repetitive tasks and apply them across multiple workflows, reducing overhead and speeding up quality checks. These tasks include:

- Identifying code changes and selecting critical tests to run.

- Automatically building test plans.

- Updating test cases whenever small code changes occur.

- Planning new test cases and execution strategies.

- Generating test cases for specific field types.

- Automating similar workflows after learning from one scenario.

- Deciding which tests should run before each release.

- Creating UI-based test cases for different components.

- Generating load for performance and stress testing.
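One item above, generating test cases for specific field types, builds on a classic technique that AI tools automate and extend: boundary-value analysis. The sketch below derives candidate inputs and expected outcomes from a simple field specification. `FieldSpec` and `boundary_cases` are hypothetical; an AI-based generator might instead infer such constraints from a form or API schema and add domain-specific values on top.

```python
from dataclasses import dataclass


@dataclass
class FieldSpec:
    """Hypothetical description of an input field's constraints."""
    name: str
    kind: str       # "int" or "string"
    min_value: int  # minimum value (int) or minimum length (string)
    max_value: int  # maximum value (int) or maximum length (string)


def boundary_cases(spec):
    """Return (input, should_be_accepted) pairs at and just around the boundaries."""
    lo, hi = spec.min_value, spec.max_value
    if spec.kind == "int":
        return [
            (lo - 1, False), (lo, True), (lo + 1, True),
            (hi - 1, True), (hi, True), (hi + 1, False),
        ]
    if spec.kind == "string":
        # Strings of each boundary length; negative lengths are impossible, so skip them.
        return [("a" * n, lo <= n <= hi) for n in (lo - 1, lo, hi, hi + 1) if n >= 0]
    raise ValueError(f"unsupported field kind: {spec.kind!r}")


print(boundary_cases(FieldSpec("age", "int", 18, 120)))
# [(17, False), (18, True), (19, True), (119, True), (120, True), (121, False)]
```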

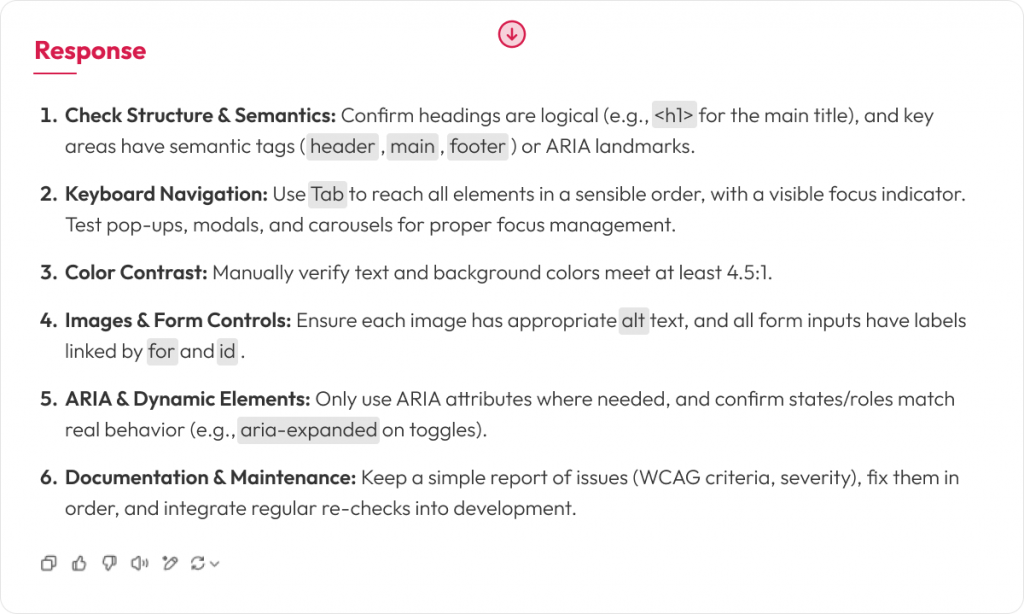

The image above shows an example of using generative AI (GenAI) for WCAG accessibility checks: it generates multiple test scenarios, broadening coverage and improving the overall quality of testing.
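Each generated accessibility scenario still needs a concrete pass/fail rule. One such rule from WCAG 2.x is the contrast ratio between text and background colors: success criterion 1.4.3 (Level AA) requires at least 4.5:1 for normal-size text. The sketch below computes the ratio from the WCAG 2.x relative-luminance definition; the function names are our own, and only this single criterion is covered.

```python
def _channel(c8):
    """Linearize one 8-bit sRGB channel per the WCAG 2.x relative luminance definition."""
    c = c8 / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance of a color given as '#rrggbb'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _channel(r) + 0.7152 * _channel(g) + 0.0722 * _channel(b)


def contrast_ratio(fg, bg):
    """Contrast ratio (from 1 to 21) between two colors, lighter over darker."""
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_normal_text(fg, bg):
    """WCAG 2.x SC 1.4.3 (AA): at least 4.5:1 for normal-size text."""
    return contrast_ratio(fg, bg) >= 4.5


print(passes_aa_normal_text("#777777", "#ffffff"))  # False (about 4.48:1)
print(passes_aa_normal_text("#767676", "#ffffff"))  # True (about 4.54:1)
```

A generated scenario might, for instance, render a component in each theme and apply a check like this to every text and background pair it finds.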

AI in unit testing: a game-changer for developers

One of the most impactful applications of AI in software testing is automated unit test generation. Writing unit tests is often deprioritized due to time constraints, yet skipping them can introduce hidden defects. AI-driven programming assistants help by generating test cases automatically, improving test coverage with far less manual effort, although developers should still review the generated tests.
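For illustration, here is the kind of test file an assistant might propose for a small function, covering a typical case, boundaries, rounding, and invalid inputs. Both `apply_discount` and the tests are hypothetical examples written for this post, not output captured from any particular tool. Generated tests like these still need review to confirm they encode the *intended* behavior rather than just the current one.

```python
import unittest


def apply_discount(price, percent):
    """Hypothetical function under test: reduce price by percent, rounded to cents."""
    if price < 0:
        raise ValueError("price must be non-negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    # Typical case
    def test_regular_discount(self):
        self.assertEqual(apply_discount(100.0, 20), 80.0)

    # Boundaries of the allowed percent range
    def test_zero_percent_keeps_price(self):
        self.assertEqual(apply_discount(59.99, 0), 59.99)

    def test_full_discount_is_free(self):
        self.assertEqual(apply_discount(59.99, 100), 0.0)

    # Rounding behavior
    def test_result_is_rounded_to_cents(self):
        self.assertEqual(apply_discount(10.0, 33), 6.7)

    # Invalid inputs
    def test_negative_price_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(-1.0, 10)

    def test_percent_out_of_range_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(10.0, 101)
```

Saved as a module, the file can be run with `python -m unittest <module_name>`.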

Tools such as TestGrade and LambdaTest are also expanding AI's role in integration testing. By identifying potential issues before deployment, AI-powered automation can reduce regression bugs and improve overall software reliability.

AI in regression testing

Regression testing validates whether new code has unintentionally broken existing functionality, an essential safeguard as frequent releases become the norm. For CTOs managing large portfolios, this process often balloons in cost and effort with traditional, manual methods.

By integrating AI, enterprises can substantially cut the overhead of traditional regression testing. AI tools identify test scenarios, generate scripts, and adapt to code changes, minimizing manual maintenance. Predictive analytics flag high-risk areas, letting teams focus on the most critical components. As a result, testing cycles can become faster and more accurate, accelerating time-to-market while reducing overall cost and risk.

Using AI agents in software testing for greater efficiency

AI is entering a new phase defined by AI assistants (reactive systems that respond to user prompts) and AI agents (proactive systems that autonomously plan and carry out tasks). Agents handle tasks such as test case generation, test execution, and issue identification. Using natural language processing (NLP), these agents convert plain-language prompts into automated scripts and adapt to application changes with self-healing features, reducing manual intervention and enabling continuous feedback in CI/CD pipelines.

Their real efficiency boost comes from running tests around the clock, in parallel, and at scale, covering more scenarios in less time than a team could manually. By analyzing historical test data, AI agents pinpoint high-risk areas and optimize test coverage. The result can be shorter test cycles, lower costs, and more reliable software releases that keep pace with evolving user and market demands.
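Running tests in parallel is a conventional technique that agents build on rather than an AI capability in itself. A minimal sketch of that part, using Python's standard `concurrent.futures` module, is below; `run_in_parallel` and the test functions are placeholders. Threads suit I/O-bound tests such as browser or API checks; CPU-bound suites would use `ProcessPoolExecutor` instead, and in either case the tests must not share mutable state.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def run_one(name, test_fn):
    """Run one test callable; any exception counts as a failure."""
    try:
        test_fn()
        return name, True
    except Exception:
        return name, False


def run_in_parallel(tests, workers=4):
    """Run independent tests concurrently and return {test name: passed}."""
    results = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(run_one, name, fn) for name, fn in tests.items()]
        for future in as_completed(futures):
            name, passed = future.result()
            results[name] = passed
    return results


# Placeholder tests standing in for slow, I/O-bound checks (e.g. browser or API calls).
def slow_check():
    time.sleep(0.3)
    assert 1 + 1 == 2


def failing_check():
    assert "abc".upper() == "ABC!"


tests = {"slow_a": slow_check, "slow_b": slow_check, "slow_c": slow_check, "fails": failing_check}
print(sorted(run_in_parallel(tests).items()))
# [('fails', False), ('slow_a', True), ('slow_b', True), ('slow_c', True)]
```

With four workers, the three slow checks overlap, so the run takes roughly as long as one of them rather than the sum of all three.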

The future of AI in testing: what’s next?

Looking forward, AI is set to become more sophisticated in software testing. Bug detection, code refactoring, and automated debugging are areas where AI is likely to have a greater impact. We are also seeing early capabilities in AI-assisted code migration, where code is translated from one programming language or framework to another, for example, migrating a Ruby on Rails application to Java.

However, adopting AI tools should be a strategic decision, not a knee-jerk reaction to industry trends. It's critical to select tools that align with your development environments and technology stacks and that integrate smoothly with existing workflows.


Final thoughts

The role of AI in software testing should be seen as augmenting, rather than replacing, skilled testers and developers. While AI is still evolving, its future impact on software engineering is likely to be profound.

Integrating AI into the software development life cycle (SDLC) isn't just a technological upgrade. It's a strategic shift that can accelerate release cycles, reduce costs, and sustain quality. From automated test creation to intelligent bug detection, AI empowers QA teams, developers, and business stakeholders alike to move faster without sacrificing precision.

If you want to enhance your QA processes or learn more about practical AI applications in development, now is the time to explore your options. Whether you are starting with a pilot project or planning full-scale adoption, our team can help you identify the best path forward and achieve a real, measurable impact on software quality.